Creating Magic Cards Part 2: Creature Types

In order to create some creature types, we introduce simple language models.


Welcome to part two of our Magic card creation series. This time we're getting to the code and actually building something!

Language Models

We use language every day to communicate with one another.

All languages have some form of underlying structure, which we call grammar. This underlying structure is necessary if language is going to be effective, as it sets common rules that speakers and listeners both agree to.

Language Models attempt to capture some of that underlying structure in order to perform tasks like natural language generation.

In its simplest form, a language model is a probability distribution over words.
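To make that concrete, here is a minimal sketch in JavaScript that estimates such a distribution by counting words. The function name `unigramDistribution` and the sample sentence are made up for illustration; this is not the code behind this series.

```javascript
// Estimate P(word) = count(word) / total number of words.
function unigramDistribution(text) {
  const words = text.toLowerCase().split(/\s+/).filter(Boolean);
  const counts = new Map();
  for (const word of words) {
    counts.set(word, (counts.get(word) || 0) + 1);
  }
  const probabilities = new Map();
  for (const [word, count] of counts) {
    probabilities.set(word, count / words.length);
  }
  return probabilities;
}

// Hypothetical sample text, chosen only to keep the numbers easy to check.
const probabilities = unigramDistribution("the cat sat on the mat");
console.log(probabilities.get("the")); // about 0.33 (2 of the 6 words)
console.log(probabilities.get("cat")); // about 0.17 (1 of the 6 words)
```

A model like this knows which words are common, but nothing about word order. That is the gap the rest of this post fills in.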

Modern language models are extremely complex and leverage neural networks with millions or even billions of parameters. We'll see those later in this series, but for now we're going to create a very simple language model.

Conditional Probabilities

Conditional probability is the idea that the likelihood of an event can change based on the occurrence of a second, related event. For example, the conditional probability that it will rain today given there are dark clouds in the sky is much higher than the conditional probability that it will rain given the sky is clear. We calculate conditional probabilities in our heads all the time without even thinking about it. Consider the following example:

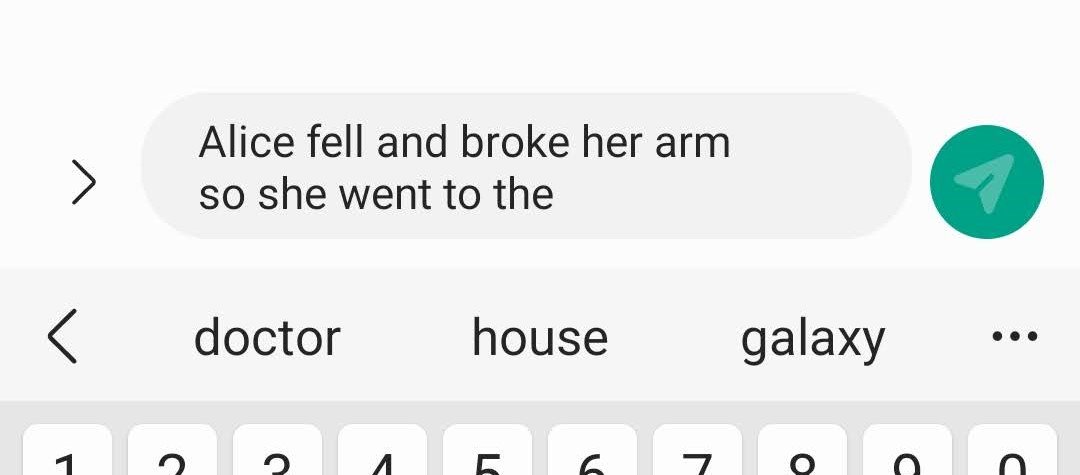

Alice fell and broke her arm earlier today so she went to the...

You probably have a pretty good idea of what word comes next. It's probably something like 'hospital' or 'doctor's office'. You probably wouldn't predict the next word to be 'moon' or 'bank'.

That's because language has an underlying conditional probability distribution, and you've learned some of it without even trying.
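If you'd like to see the rain example as arithmetic, here is a small sketch. The observations are invented purely for illustration, and `conditionalProbability` is a hypothetical helper name. The idea is to look only at the days where the condition holds, then ask how often the event happened on those days.

```javascript
// Made-up observations for illustration only: one record per day.
const days = [
  { clouds: "dark", rain: true },
  { clouds: "dark", rain: true },
  { clouds: "dark", rain: true },
  { clouds: "dark", rain: false },
  { clouds: "clear", rain: false },
  { clouds: "clear", rain: false },
  { clouds: "clear", rain: true },
  { clouds: "clear", rain: false },
];

// P(event | given) = (days where both hold) / (days where `given` holds).
function conditionalProbability(records, event, given) {
  const matching = records.filter(given);
  if (matching.length === 0) return NaN; // the condition was never observed
  return matching.filter(event).length / matching.length;
}

const isRainy = (day) => day.rain;
const rainGivenDark = conditionalProbability(days, isRainy, (d) => d.clouds === "dark");
const rainGivenClear = conditionalProbability(days, isRainy, (d) => d.clouds === "clear");

console.log(rainGivenDark);  // 0.75 (3 of the 4 dark-cloud days)
console.log(rainGivenClear); // 0.25 (1 of the 4 clear days)
```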

N-Gram Models

If you used a smartphone in 2015, you probably used an n-gram model without realizing it. Many predictive text keyboards used this technology: they tried to predict the most likely next words based on the last few words you typed.

So what is an n-gram? It's just a sequence of n consecutive words. For example, when n=3, we have a trigram. Trigrams are 3-word sequences such as (Alice fell and), (fell and broke), (broke her arm), etc.
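Here is a minimal sketch of pulling n-grams out of a piece of text by sliding a window of n words across it. The function name `ngrams` is my own choice for this example, and splitting on whitespace is a simplifying assumption (a real tokenizer would also handle punctuation).

```javascript
// Slide a window of n words across the text, one word at a time.
function ngrams(text, n) {
  const words = text.split(/\s+/).filter(Boolean);
  const result = [];
  for (let i = 0; i + n <= words.length; i++) {
    result.push(words.slice(i, i + n));
  }
  return result;
}

const trigrams = ngrams("Alice fell and broke her arm", 3);
for (const trigram of trigrams) {
  console.log(`(${trigram.join(" ")})`);
}
// (Alice fell and)
// (fell and broke)
// (and broke her)
// (broke her arm)
```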

We can take a text and split it up into all of its n-grams, and from there compute conditional probabilities.

Let's dive into that a little deeper. How do we turn these n-grams into a language model? The first step is to split up our reference text into every n-gram that appears in the text.

For this example, we'll use a bigram (n=2) model with a two-sentence reference text.

Alice went to the store

Alice ate dinner and went home

The first thing we do is add special START and END tokens to our sentences, so they end up looking like this.

START Alice went to the store END

START Alice ate dinner and went home END

Then, we calculate all of the bigrams in the document. We get:

(START Alice), (Alice went), (went to), (to the), (the store), (store END), (START Alice), (Alice ate), (ate dinner), (dinner and), (and went), (went home), (home END)
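As a rough sketch, the tokenizing and bigram steps above might look like this in JavaScript. The function name `bigrams` is an assumption for this example, and the START and END tokens are represented as plain strings.

```javascript
// Wrap a sentence in START/END tokens and pair each token with the one after it.
function bigrams(sentence) {
  const tokens = ["START", ...sentence.split(/\s+/), "END"];
  const pairs = [];
  for (let i = 0; i < tokens.length - 1; i++) {
    pairs.push([tokens[i], tokens[i + 1]]);
  }
  return pairs;
}

const referenceText = [
  "Alice went to the store",
  "Alice ate dinner and went home",
];
const allBigrams = referenceText.flatMap(bigrams);

console.log(allBigrams.length); // 13
console.log(allBigrams.map((pair) => `(${pair.join(" ")})`).join(", "));
```

Note that (START Alice) shows up twice. Keeping duplicates matters, because how often a bigram appears is exactly what the probabilities are built from.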

What do we do with all of this? We build a basic conditional probability distribution and use it to generate sentences.

We start our model off with the special START token. Then we ask it to choose, based on the probability distribution, what the first word in the sentence should be.

The model looks at all of the bigrams and finds that whenever the first word of a bigram is START, the second word is Alice, so the next word has to be Alice.

We repeat the process, asking for a continuation. Since Alice is followed by only two different words in our bigrams, the model has to choose between went and ate.

These are equally likely according to our model, so it chooses at random.
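Here is one way to turn the bigram counts into those probabilities. It is a sketch, not the code running on this page, and `bigramProbabilities` is a name I picked for the example. For each word, it counts what followed that word, then divides by the total, which gives P(next | previous).

```javascript
// Count what follows each token, then normalize each row so it sums to 1.
function bigramProbabilities(sentences) {
  const counts = new Map(); // previous token -> Map(next token -> count)
  for (const sentence of sentences) {
    const tokens = ["START", ...sentence.split(/\s+/), "END"];
    for (let i = 0; i < tokens.length - 1; i++) {
      const row = counts.get(tokens[i]) || new Map();
      row.set(tokens[i + 1], (row.get(tokens[i + 1]) || 0) + 1);
      counts.set(tokens[i], row);
    }
  }
  const probabilities = new Map();
  for (const [previous, row] of counts) {
    let total = 0;
    for (const count of row.values()) total += count;
    const normalized = new Map();
    for (const [next, count] of row) normalized.set(next, count / total);
    probabilities.set(previous, normalized);
  }
  return probabilities;
}

const model = bigramProbabilities([
  "Alice went to the store",
  "Alice ate dinner and went home",
]);

console.log(model.get("START").get("Alice")); // 1
console.log(model.get("Alice").get("went"));  // 0.5
console.log(model.get("Alice").get("ate"));   // 0.5
```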

This process repeats until the model hits the special END token, at which point it knows the sentence is done.
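The whole generation loop can be sketched in a few lines. This is an illustrative version under my own naming (`buildFollowers`, `generateSentence`), not the code behind this page. Instead of storing probabilities, it stores every observed follower, duplicates included, so picking uniformly from that list is the same as sampling from the conditional distribution.

```javascript
const START = "START";
const END = "END";

// For each token, list every token that followed it in the reference text.
function buildFollowers(sentences) {
  const followers = new Map();
  for (const sentence of sentences) {
    const tokens = [START, ...sentence.split(/\s+/), END];
    for (let i = 0; i < tokens.length - 1; i++) {
      if (!followers.has(tokens[i])) followers.set(tokens[i], []);
      followers.get(tokens[i]).push(tokens[i + 1]);
    }
  }
  return followers;
}

// Walk from START, picking a follower at random each step, until END is drawn.
// `random` can be swapped out to make the walk repeatable.
function generateSentence(followers, random = Math.random) {
  const words = [];
  let current = START;
  while (true) {
    const options = followers.get(current);
    const next = options[Math.floor(random() * options.length)];
    if (next === END) return words.join(" ");
    words.push(next);
    current = next;
  }
}

const followers = buildFollowers([
  "Alice went to the store",
  "Alice ate dinner and went home",
]);
console.log(generateSentence(followers));
```

With this tiny reference text there are only four possible outputs, and two of them ("Alice went home" and "Alice ate dinner and went to the store") never appeared in the original. A text in which some word can eventually lead back to itself could loop for a long time, so a real implementation would likely cap the sentence length.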

How effective are n-gram models at text generation? It depends on what you're going for. Here's an example of a sentence written by a trigram model trained on all of William Shakespeare's sonnets:

Past cure I am forsworn, but these particulars are not kept hath left me, richer than wealth, some wantonness, some in their glory die.

That sounds like Shakespeare, but it doesn't really make sense. Since n-gram models only look at a limited context, they can't produce cohesive sentences, just probabilistic approximations that 'sound right'.

Creature Types

If you need a refresher on Magic cards, you can go back to the first post in this series. A card's creature type is the line of text in the middle that describes the creature's species, and sometimes its class.

Our n-gram model is based on over 12,000 creature types collected from Magic: The Gathering sets from Alpha (1993) to Kamigawa: Neon Dynasty (2022). Because the n-gram model is simple and purely probabilistic, the distribution of creature types it creates should be similar to the distribution in our dataset, which reflects real Magic cards.
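To give a feel for how this applies to creature types, here is a sketch of a general n-gram generator that works for both bigrams (n=2) and trigrams (n=3). It is not the model used on this page. The names `trainNgramModel` and `generate` are hypothetical, and `sampleTypes` is a handful of type lines picked by hand for illustration, not the real dataset.

```javascript
const START = "START";
const END = "END";

// Map each (n-1)-word context to the list of words that followed it.
// Duplicates are kept so that uniform sampling matches observed frequencies.
function trainNgramModel(lines, n) {
  const table = new Map();
  for (const line of lines) {
    const words = line.trim().split(/\s+/);
    // Pad with n-1 START tokens so the first word also has a full context.
    const tokens = [...Array(n - 1).fill(START), ...words, END];
    for (let i = 0; i + n <= tokens.length; i++) {
      const context = tokens.slice(i, i + n - 1).join(" ");
      if (!table.has(context)) table.set(context, []);
      table.get(context).push(tokens[i + n - 1]);
    }
  }
  return table;
}

// Generate one type line by repeatedly sampling the next word for the current context.
function generate(table, n, random = Math.random) {
  const context = Array(n - 1).fill(START);
  const output = [];
  while (true) {
    const followers = table.get(context.join(" "));
    const next = followers[Math.floor(random() * followers.length)];
    if (next === END) return output.join(" ");
    output.push(next);
    context.push(next); // slide the context window forward by one word
    context.shift();
  }
}

// Hand-picked sample for illustration only (NOT the 12,000-entry dataset).
const sampleTypes = [
  "Human Wizard", "Human Soldier", "Goblin Warrior",
  "Elf Druid", "Elf Warrior", "Zombie", "Dragon",
];

const bigramTable = trainNgramModel(sampleTypes, 2);
const trigramTable = trainNgramModel(sampleTypes, 3);
console.log(generate(bigramTable, 2));
console.log(generate(trigramTable, 3));
```

Because followers are sampled in proportion to how often they were seen, a species that appears twice in the sample (like Human or Elf here) is twice as likely to start a generated type as one that appears once.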

Try it Out!

I've recreated the n-gram model in JavaScript, which means you can try it right here on this page. There's both a bigram and a trigram model available.

These are pretty good. Almost every one could be a real Magic card. In all honesty, they're not usually super exciting, and that's because they are realistic. We could introduce some more randomness at the cost of

Moving Forward

Now that we have a good way to generate creature types, we've taken the first steps towards creating our own Magic cards. Next time we'll finish creating the text for our cards.

Acknowledgements

- Data for this project was provided by Scryfall and used in accordance with the Wizards of the Coast Fan Content Policy.

- Here's Apple's n-gram predictive text patent from 2015.

- If you want to learn more about n-gram models, here's a great textbook chapter.