VirtualPet Dream

I trained an AI to 'dream' up virtual pets.

Virtual Pets

When I was growing up, fake pets were all the rage. I remember my friends showing off an endless stream of petlike toys, from Furbies to Poo-Chis, Pokémon to Digimon, Neopets to Tamagotchi. The only ones I had access to were the free virtual pet websites like Neopets, one of the most popular sites of the early 2000s. My brothers and I spent countless hours playing simple Flash games so we could feed and groom our virtual pets. The virtual pet craze continued for a few years with products such as Webkinz, but largely died out during the 2010s.

I love nostalgia, and in search of the childhood high that comes with creating a new 'friend', I set out to use AI to create my own virtual pets.

Training a Model

When I first showed this project to my family, one of my brothers asked me, "How do you train a neural net?" My answer was simple, yet explanatory:

You take the neural net and show it a bunch of samples.

That's really all it is. Obviously, the particulars are more complicated than that, but that's a great working definition.

If you want to train a neural machine translation system, you feed it a bunch of pairs of sentences in both languages and let it figure things out.

For this project, I showed the model a bunch of virtual pet images scraped from the internet, and let it decide what a virtual pet looks like.
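To make "show it a bunch of samples" a bit more concrete, here is a toy sketch of a training loop. It is not the code used for this project: instead of a neural net it fits a two-parameter line, and the function name `train_linear_model`, the learning rate, and the made-up data are all assumptions chosen for illustration. The loop has the same shape as real training, though: guess, measure the error, nudge the parameters, repeat.

```python
import numpy as np

def train_linear_model(xs, ys, steps=2000, lr=0.1):
    """Fit y ~ w * x + b by repeatedly showing the model the samples."""
    w, b = 0.0, 0.0  # the "model" starts out knowing nothing
    for _ in range(steps):
        preds = w * xs + b   # the model's current guesses
        error = preds - ys   # how wrong each guess is
        # Nudge each parameter in the direction that lowers the mean squared error.
        w -= lr * 2.0 * np.mean(error * xs)
        b -= lr * 2.0 * np.mean(error)
    return w, b

# Made-up samples that roughly follow y = 2x + 1 (illustrative data only).
rng = np.random.default_rng(0)
xs = rng.uniform(-1.0, 1.0, size=200)
ys = 2.0 * xs + 1.0 + rng.normal(0.0, 0.01, size=200)

w, b = train_linear_model(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}")
```

A real image model has millions of parameters instead of two, but nobody tells it the answer either. It only ever sees samples and its own mistakes.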

Conditional vs Unconditional Generation

Text-to-image models do something called conditional generation: the image they create is conditioned on some input text. Creating a diffusion model for conditional generation is a little beyond the scope of this project, so I decided to do unconditional generation. This means the model takes no prompt, but rather generates the image from random noise alone. If the model has only been trained on one type of image, it will only generate something similar to those inputs.

Denoising Diffusion Models

Denoising diffusion models are currently the state of the art when it comes to generating new images. You may have seen examples on the internet generated by OpenAI's DALL-E 2 or Google's Imagen.

The idea behind them is pretty interesting.

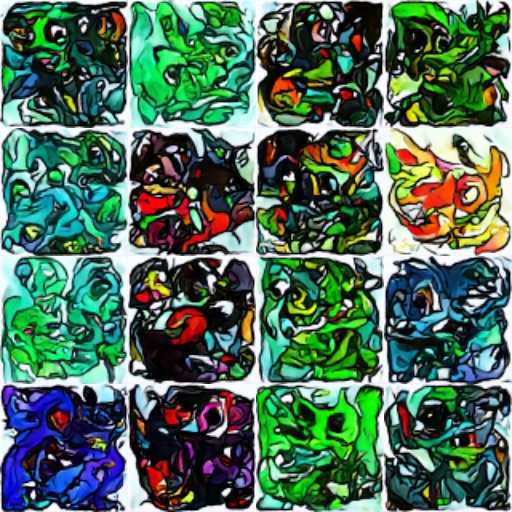

Essentially, you take a model and show it a bunch of pictures. Then you incrementally add more and more static (noise) to each image, and try to get the model to 'undo' the static. This is the 'denoising' part of denoising diffusion.
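The "add more and more static" step can be sketched in a few lines of NumPy. This is a minimal sketch that assumes the standard DDPM-style formulation with a linear noise schedule. The post doesn't say which schedule the project used, so the names `make_alpha_bars` and `add_noise`, the 1000 steps, and the beta values are illustrative assumptions, and a random array stands in for a pet image.

```python
import numpy as np

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original image's signal that survives up to each step."""
    betas = np.linspace(beta_start, beta_end, num_steps)  # assumed linear schedule
    return np.cumprod(1.0 - betas)

def add_noise(image, t, alpha_bars, rng):
    """Blend the image with Gaussian static; larger t means more static."""
    noise = rng.standard_normal(image.shape)
    noisy = np.sqrt(alpha_bars[t]) * image + np.sqrt(1.0 - alpha_bars[t]) * noise
    return noisy, noise  # during training, the model learns to predict `noise`

rng = np.random.default_rng(0)
alpha_bars = make_alpha_bars()
image = rng.uniform(-1.0, 1.0, size=(32, 32, 3))  # stand-in for a real pet image

slightly_noisy, _ = add_noise(image, 10, alpha_bars, rng)   # still recognizable
mostly_static, _ = add_noise(image, 999, alpha_bars, rng)   # almost pure noise
```

Under these assumptions, training amounts to picking a random step `t`, noising an image to that level, and asking the model to guess which static was added.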

After training the model to reconstruct a bunch of images, you give it a bunch of noise, and tell it to incrementally remove static to reveal the picture. The model 'cleans up' the static, creating a new image.
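Generation runs that process in reverse. Below is a rough sketch of a DDPM-style sampling loop, again under assumptions: `predict_noise` is a hypothetical placeholder for the trained network (here it returns zeros, so the output is not a pet), and the schedule values are the same illustrative ones as before, not necessarily what this project used.

```python
import numpy as np

def predict_noise(noisy_image, t):
    """Hypothetical stand-in for the trained model's noise estimate at step t."""
    return np.zeros_like(noisy_image)  # a real network would go here

def sample(shape, num_steps=1000, beta_start=1e-4, beta_end=0.02, seed=0):
    """Start from pure static and remove a little predicted noise at each step."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(beta_start, beta_end, num_steps)  # assumed linear schedule
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)

    x = rng.standard_normal(shape)  # the "bunch of noise" we begin with
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, t)
        # Subtract this step's share of the predicted static, then rescale.
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Every step except the last adds back a small amount of fresh noise.
            x = x + np.sqrt(betas[t]) * rng.standard_normal(shape)
    return x

pet = sample((32, 32, 3), num_steps=50)
print(pet.shape)
```

Because the loop calls the model once per step, a long schedule means a lot of model calls for every image generated.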

Demo

I entered this model in the Gradio NYC Hackathon. As part of the contest I made a demo that you can try yourself.

It runs very slowly, so you'll need to leave the tab open for 10-20 minutes to generate some pets.

You can try the demo here.

If you have some basic Python skills, you can run the Colab notebook and generate new pets pretty fast.

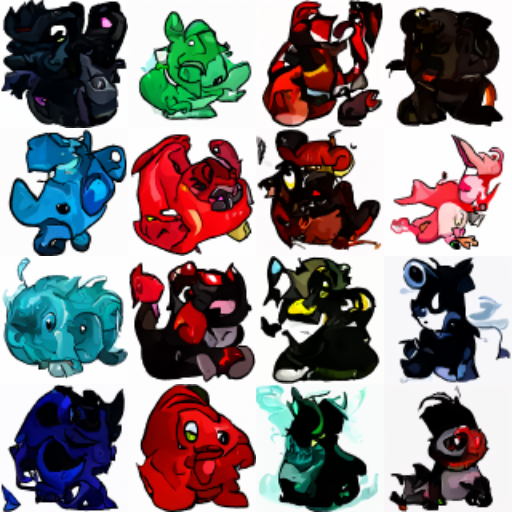

Gallery

Conclusion

Overall, I was pretty happy with how my pets turned out. They often look abstract and a little wonky, but this was a great first attempt at training an image generation model from scratch.