State of Generative AI - January 2026

How good are current AI tools? What should you watch out for? What can you use?

Rapid Progress of Generative AI

We live in a time of crazy AI progress. Things that we couldn't have dreamed of a couple of years ago are now very real possibilities at anyone's fingertips. This post details a few of my thoughts on the capabilities of public-facing tools. Rather than focusing on the advancements of these models in a highly technical way, I'm going to talk about their downstream capabilities in an everyday context. How are people using them? What should you watch out for? How could YOU take advantage of generative AI? I hope to give you a feel for what is going on right now, what tools are available to you, and what you should watch out for when encountering AI in the wild.

I'll touch on several modalities of modern generative AI.

Before we get into that, I want to remind you of the notion of the Turing Test. Named for the famous mathematician Alan Turing, the test asks whether a system's behavior can be distinguished from that of a human. If it can't, the system passes. Text, audio, and image generation models can now pass the Turing Test in various contexts. Sometimes, people can't tell whether an AI wrote or said something. Sometimes, people can't tell whether an image or video was made with AI.

Text Generation

Large Language Models (LLMs) debuted around 2018 with models like the original GPT and BERT. Enabled by a new neural network architecture called the transformer, LLMs learn the probability distribution underlying language. They do this by learning from trillions of tokens (roughly, words or pieces of words) scraped from books and the internet. While LLMs learn language, they acquire emergent abilities, which are skills they weren't explicitly trained to gain. For example, even though nobody ever sat down and decided to teach GPT-3 math, it knows how to do basic math just from reading language with math in it.
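To make "learning the probability distribution underlying language" a little more concrete, here is a toy sketch in Python. It is not how a real LLM works: real models use transformers over trillions of tokens, while this just counts which word follows which in a made-up one-line corpus. The function names and the corpus are my own illustrative assumptions.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, how often each next word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def next_word_probs(counts, word):
    """Turn the raw follow-up counts for one word into probabilities."""
    followers = counts[word.lower()]
    total = sum(followers.values())
    if total == 0:
        return {}  # the model never saw this word followed by anything
    return {w: c / total for w, c in followers.items()}

# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
print(probs)
```

After "the", this toy model has seen "cat" twice and "mat" and "fish" once each, so it assigns "cat" the highest probability. An LLM does something similar in spirit, but conditions on far more context than a single previous word.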

You have probably used an LLM at this point, even if you didn't mean to. Popular ones include OpenAI's ChatGPT, Gemini from Google, and Grok from xAI. When you do a Google search, the AI Mode response is composed by an LLM.

As with all of the modalities of AI we're discussing here today, progress in text generation has been super rapid. Text written by AI wasn't even close to human level until 2019, when OpenAI's GPT-2 wrote the famous "Ovid's Unicorn" story. Researchers gave the model the following prompt:

In a shocking finding, scientist discovered a herd of unicorns living in a remote, previously unexplored valley, in the Andes Mountains. Even more surprising to the researchers was the fact that the unicorns spoke perfect English.

GPT-2 completed the story as follows:

The scientist named the population, after their distinctive horn, Ovid’s Unicorn. These four-horned, silver-white unicorns were previously unknown to science.

Now, after almost two centuries, the mystery of what sparked this odd phenomenon is finally solved.

Dr. Jorge Pérez, an evolutionary biologist from the University of La Paz, and several companions, were exploring the Andes Mountains when they found a small valley, with no other animals or humans. Pérez noticed that the valley had what appeared to be a natural fountain, surrounded by two peaks of rock and silver snow.

Pérez and the others then ventured further into the valley. “By the time we reached the top of one peak, the water looked blue, with some crystals on top,” said Pérez...

In retrospect, that doesn't seem too impressive. Not everything the model says makes a lot of sense. For example, Pérez's supposed quote, "By the time we reached the top of one peak, the water looked blue, with some crystals on top," has nothing to do with the unicorns.

In spite of these limitations, this example created a small moral panic back in 2019. People were afraid of how this powerful technology could be misused, causing OpenAI to delay the model's release, citing safety concerns.

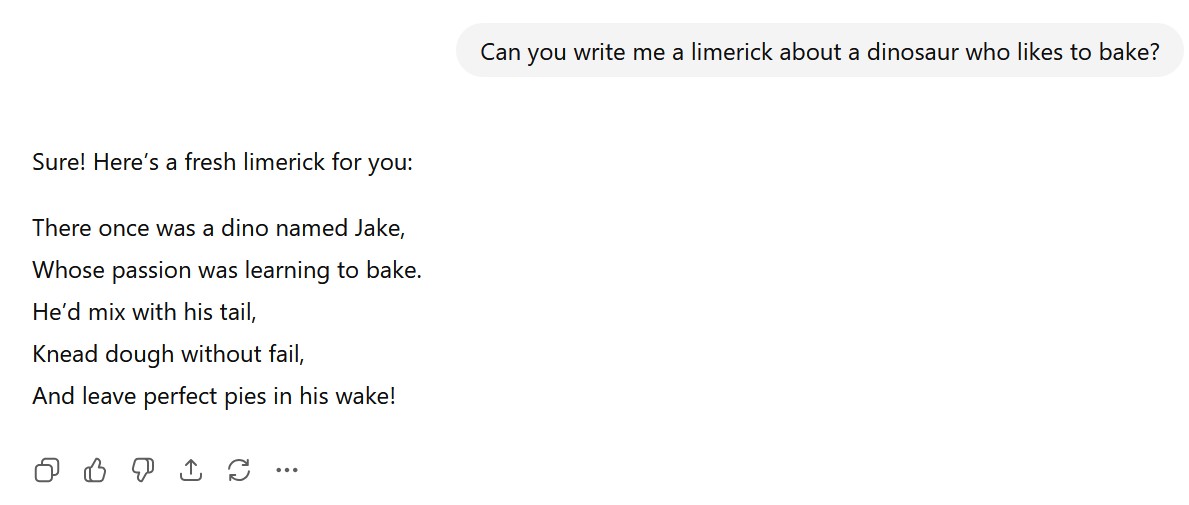

LLMs have come a long way since then. They are capable of following complex instructions perfectly, and can perform many tasks better than the average person. As an example, I had ChatGPT write me the following poem, which it did in under one second:

The instructions are non-trivial. In order to do this, ChatGPT needs to understand limerick structure, stress, and rhyme, as well as have appropriate world knowledge to understand baking and dinosaurs. Finally, it needs to be able to synthesize these into a novel domain and structure. (Dinosaurs did not bake.)

And that sort of thing was impressive when ChatGPT first came out, but it's gotten so much more capable in the last couple of years. If I ratchet up the difficulty and poetic constraints, ChatGPT still does a good job in just a couple of seconds. Notice how well the model follows fairly difficult instructions about style and content.

In one of my first blog posts, I had to fine-tune GPT-3 on many, many poems, and it didn't do nearly as well and wasn't nearly as steerable. (I never could've gotten it to write a poem about a baking dinosaur, let alone one with metrical constraints like this.)

Poetry is just one small example. In most domains, LLMs pass the Turing Test. That is, they write text that is indistinguishable from human-written text. This text is being used in day-to-day contexts.

Real-World Applications

AI text generation is effective in real-world contexts. I've published research showing that LLMs are as effective as (or more effective than) humans at political persuasion. That's a big deal.

Many of the comments you see on social media are written by bots driven by LLMs. People are trying to sway public opinion en masse using these LLM bots. Even before LLM comment bots, it probably wasn't healthy to care too much about strangers' opinions on social media. Now, you for sure shouldn't care about what LLM bots say online.

There are many examples of Twitter users having been revealed to be bots. One simple technique is to give the suspected bot a new instruction, the way you would prompt an LLM. Usually, the bot becomes confused, stops acting in its assigned role, and performs the new task.

AI Coding

LLMs are obviously highly proficient at generating natural language text. They are also really good at generating a different kind of text: computer code. For example, Google's Gemini model did well enough to have earned a gold medal at the International Collegiate Programming Contest (ICPC).

Like most software engineers, I use AI coding as part of my workflow, especially when it comes to low-stakes applications or things I'm not very good at, like graphic design. For example, the interface for my Bible Semantic Search tool was coded by AI. However, there are many potential pitfalls to coding with AI. Overall, it seems best used as a productivity boost for people already proficient in coding.

How can YOU use text generation?

You can start using LLMs right now! Providers like OpenAI (ChatGPT) and Google (Gemini) have web interfaces with free tiers. If you use LLMs a lot, you can pay a monthly subscription fee to use smarter models and not experience rate limits. LLMs are useful for much more than poetry generation. However, there are several things to watch out for when using AI to write for you.

Things to watch out for

As with any tool, LLMs come with several pitfalls and issues that the wise user needs to watch out for.

AI 'style'

If you just have ChatGPT (or any other LLM) do writing for you, it will invariably write in a specific and very distinct style. In fact, I NEVER have LLMs write for me. While this style isn't necessarily easy for everyone to detect, people who use LLMs regularly have a very good intuition for detecting LLM writing. This 'LLM voice' can feel artificial and off-putting. Below is a paragraph, written by ChatGPT, that exhibits this LLM voice.

You need to be careful when letting LLMs write for you—mostly because the writing has a habit of sounding familiar in a very specific way. It’s not that the ideas are off, it’s that the phrasing leans toward tidy contrasts, smooth transitions, and those helpful little em dashes that keep everything moving along. The tone often explains itself just a bit too clearly, favoring balance and polish over idiosyncrasy. None of this is disastrous—it just means the voice can start to feel generic unless you step in and rough it up a little.

Some instances of LLM writing are pretty clear. LLMs favor em-dashes (—), and often use the "it's not this, it's that" construction. However, these can be present in human writing as well. While many firms have created 'AI detectors' for text, they're not super reliable and you shouldn't rely on them to tell whether text was from an LLM.

Hallucinations and RAG

Another common issue with LLMs is their tendency to hallucinate, which is the technical term for when the LLM makes up something that is untrue. LLMs are trained to generate responses that people like. However, this can cause the models to make up something they think you would like instead of saying, "I don't know."

There were widely reported stories in the last couple of years where lawyers submitted court filings that cited nonexistent cases hallucinated by an LLM. How can you, as a user, get around that?

The answer is something called RAG (Retrieval Augmented Generation). You may have interacted with RAG systems like Google's AI mode or Perplexity. When you ask a RAG system a question, it first looks up a bunch of relevant sources, maybe in a database or maybe on the internet. Then, the LLM reads those sources really fast and generates a response, citing them. This lets you know where the information came from, and you can check it yourself.
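Here is a minimal sketch of the retrieve-then-generate idea in Python, under some loud assumptions: the three-entry "database" is made up, retrieval is a crude word-overlap score (real systems typically use search engines or embedding similarity), and the final call to an LLM is left out, so the sketch stops at building the prompt the LLM would receive. All names here are hypothetical.

```python
import re

# Hypothetical mini "database"; a real system would search the web or a document store.
DOCUMENTS = {
    "doc1": "Limericks have five lines with an AABBA rhyme scheme.",
    "doc2": "GPT-2 was released by OpenAI in 2019.",
    "doc3": "Sourdough bread needs a starter and a long rise.",
}

def tokenize(text):
    """Lowercase the text and split it into a set of simple word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, k=2):
    """Return up to k document ids, ranked by words shared with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(text)), doc_id) for doc_id, text in documents.items()]
    scored = [(score, doc_id) for score, doc_id in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in scored[:k]]

def build_prompt(question, documents, k=2):
    """Assemble the text an LLM would be given: sources first, then the question."""
    ids = retrieve(question, documents, k)
    sources = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in ids)
    prompt = (
        "Answer using only the sources below and cite them by id.\n"
        f"{sources}\n"
        f"Question: {question}"
    )
    return prompt, ids

prompt, cited = build_prompt("When was GPT-2 released?", DOCUMENTS)
print(prompt)
```

The point of the design is that the model is asked to answer from text you can see, labeled with ids you can follow back to the source, rather than from whatever it happens to remember.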

If you're using an LLM in a high-stakes context, you should make sure your LLM is a RAG system that can cite its sources.

Bad Uses of Text Generation

One other pitfall to avoid with LLMs is their potential bad use cases. Some of these are obvious. Even though LLMs are trained not to do bad things (like give you bomb-making instructions or help you find drugs), sometimes models can be 'jailbroken' to follow bad instructions.

However, I assume that my readers aren't trying to use LLMs for crime. I am worried specifically about people's emotional attachment to chatbots. According to a recent Pew study, at least 1/3 of teens use chatbots daily. On top of that, a Common Sense Media study showed that around 3/4 of teens have used AI chatbots for emotional companionship or therapy. We've seen a huge decline in teen mental health over the last 10 years corresponding with an increase in social media like Instagram. It took years to observe and pinpoint the causes of this decline. Many experts warn against using AI for companionship or therapy. I agree with them!

Don't use LLMs as friends or romantic partners!

Text generation AI is really exciting! It's powerful, and can do a lot of really cool stuff. In spite of potential bad use cases, you should consider using AI to help in your daily life. But it's more than just text.

Image Generation

Just four years ago, I wrote a blog post on generative image models. Here's one example I was really excited about.

AI image generation has gotten a lot better over the last 5 years. You can use systems like Gemini 3 Pro Image (Nano Banana Pro) or ChatGPT's image generator to (basically instantly) make a picture of almost anything. Here's Nano Banana's generation of a Teletubbies Orthodox Icon:

Fears Around AI Image Capabilities

Now some people are a little afraid of AI image generation. Pretty much anybody can now make a picture of pretty much anything. If you see a picture of something unbelievable on the internet, there's a good chance it's an AI image.

But something similar happened 10-15 years ago with Photoshop. There was a big "you can't believe your eyes" scare, and we've figured out how to cope with that. In fact, there was also a little bit of a moral panic about 100 years ago, when cameras became widespread and it was discovered that film could be edited. Essentially, people have been able to fake convincing images for as long as we've been able to produce convincing images. This may be a new scale, but it's not a new problem.

Can You Tell if an Image Was Created With AI?

There have been a number of tricks to help people distinguish AI images from real ones. You may have been told to 'count the teeth' or 'count the fingers' in a generated image. While image generation models have had trouble with teeth and fingers in the past, they keep getting better and better, and these tricks are no longer reliable. Other weaknesses of image generation models, like an inability to generate text, are diminishing as well (check out the correct Teletubbies names above).

So is it even possible to tell if an image is generated by AI? Many people are making AI images with outdated models, and it's fairly easy to find inconsistencies in those pictures. Scouring an image may reveal small details the model got 'wrong'.

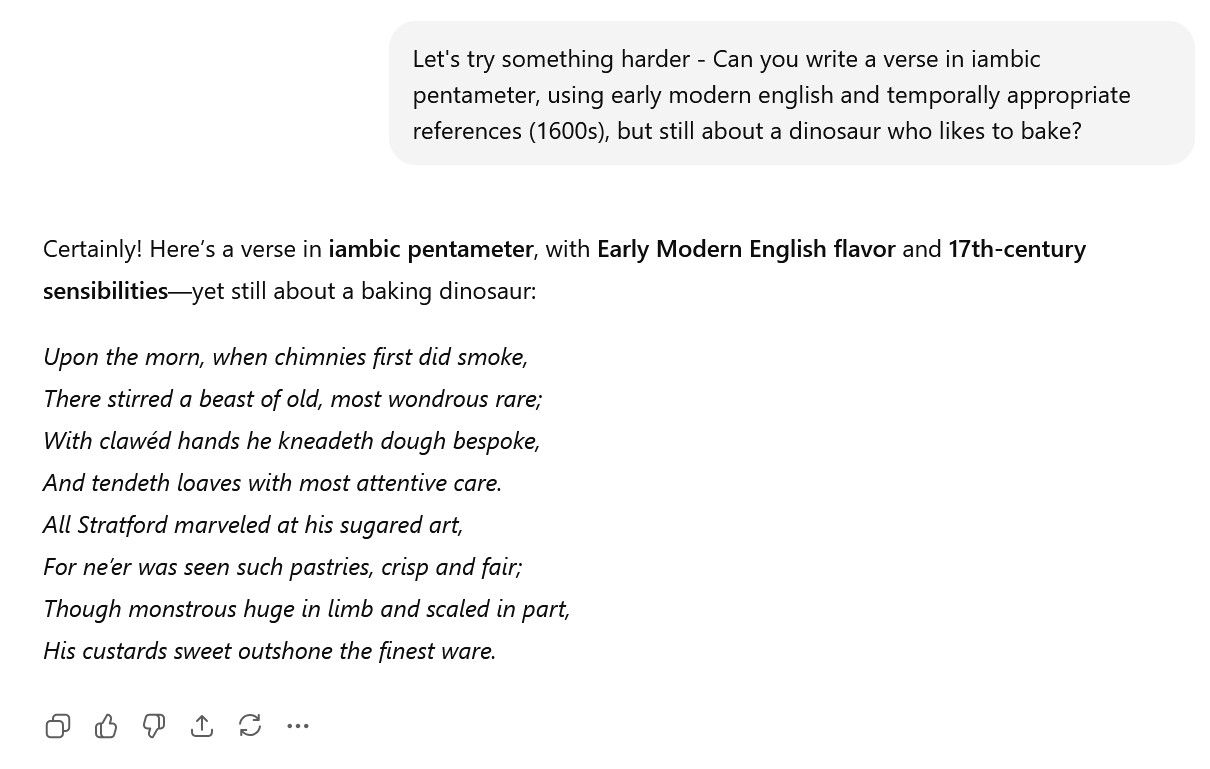

But maybe not! Below are two pictures. One is a sandwich I made, and one is generated by Nano Banana based on the picture I took. Can you tell which is which? How did you know?

Many companies watermark their AI-generated images, and others require disclosure of AI-generated content. However, it's not that hard to remove a watermark or lie about your AI use. The only way you can be sure an image is not created with AI is if it comes from a reputable source. While many images have a distinct 'AI-Generated' style, not all models do, especially newer and more capable models.

Steerability

I have access to Gemini 3 Pro Image (Nano Banana Pro) right now. It is significantly better than other image generation models in one aspect: steerability. Models like Nano Banana can execute much more complex instructions than previous models. However, they still have some limitations.

As a case study, I wanted to make an icon for a group chat with me, my wife, her siblings and their spouses, and my mother-in-law. To do this, I gave Nano Banana pictures of all of us and had it make a little drawing of the family. I had to instruct it to make all of the women the same height. (My wife and her sisters and mom are all about the same height.) I also had to give explicit instructions about a couple of people's hair and size. Then, I tried to position everyone in the right spot, but I couldn't get it all to work out. No amount of prompting would make one of my brothers-in-law taller than the other one. No amount of prompting could make me stand next to my wife without ruining the rest of the picture. I'm not the tallest one in real life, but I could not for the life of me make everyone the correct relative height. As a result, it looks kind of like I'm standing on a box behind the rest of the family. Here's the picture.

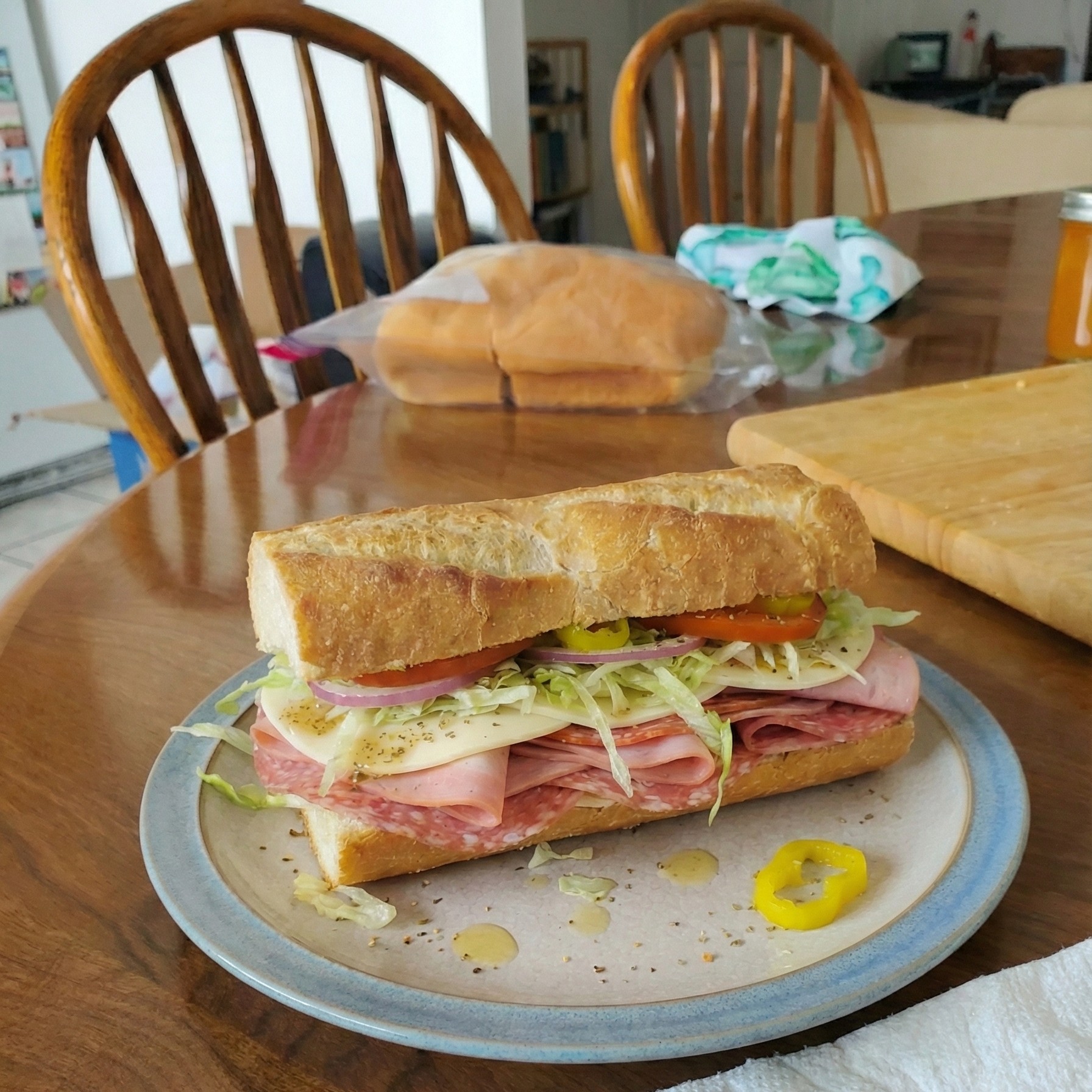

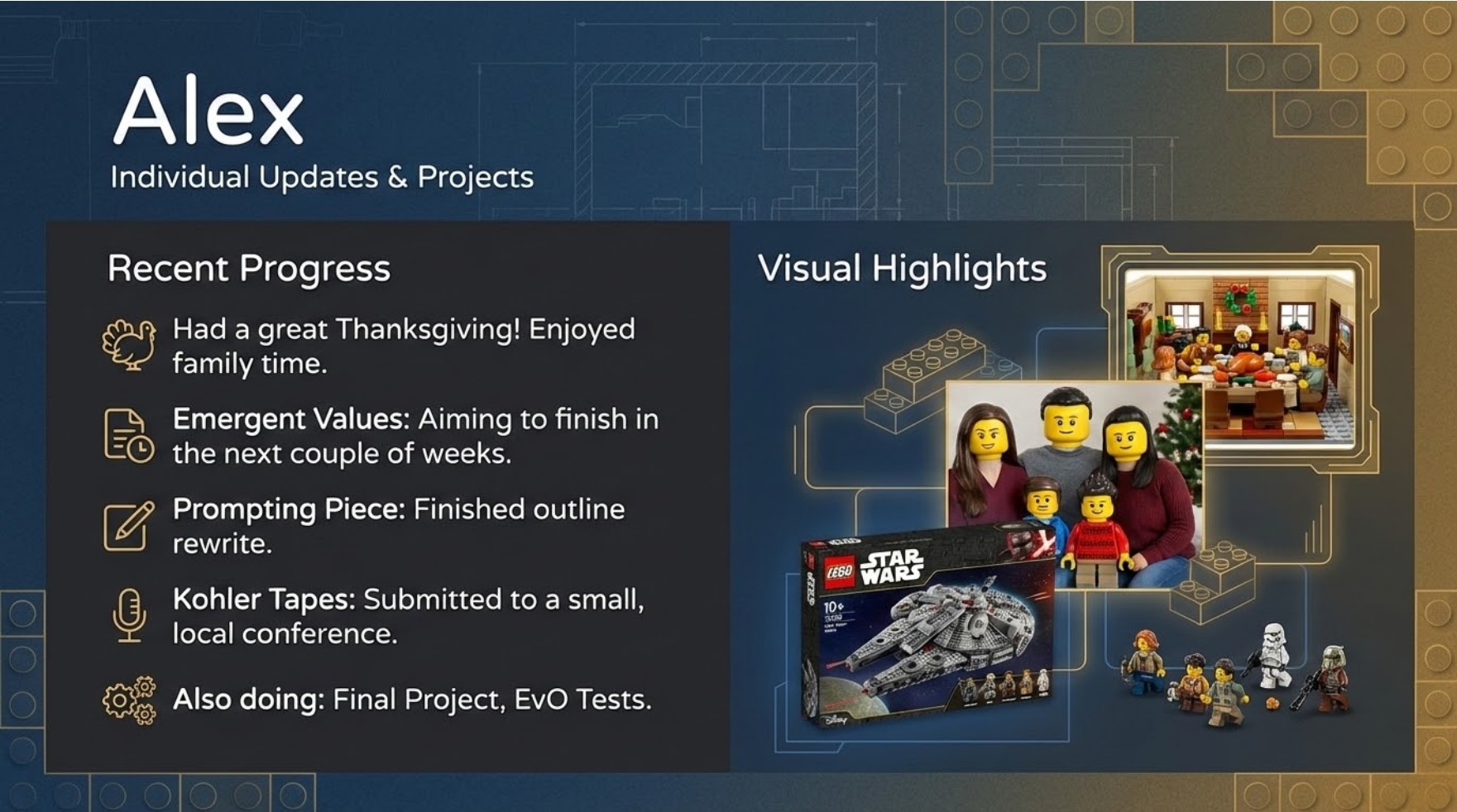

Even though these models are often very impressive, they sometimes don't do as well. As an example, each week my lab has lab meetings. We create a shared Google Slides deck, and each lab member creates a slide. I often include photos of my Lego creations and pictures of fun events, in addition to text about my lab-related progress. The other week, I created my slide as normal.

When I was done, a little icon appeared at the bottom of my screen, urging me to 'beautify' my slide using Nano Banana. Companies are so excited to integrate AI tools in their products that we are bombarded with AI suggestions in our email, search engines, text editors, and more. I decided to try out the "beautify" function on my slide.

I thought this was very funny. Nano Banana inserted text saying that I "Enjoyed family time". Much funnier was that it just replaced my original Lego creations with some Legos it imagined. Based on the Legos and the family photo I included, it also made one family photo of weird hybrid Lego humans.

I will not be trusting Nano Banana to 'beautify' my slides any time soon.

AI Image Generators are not the Same as LLMs

Image generation systems can be integrated with LLMs, enabling you to give detailed text instructions about what image you want to create and/or edit. However, it's important to realize that the image generation system and the language model are not the same system. Just because you ask for the image in the ChatGPT interface doesn't mean that ChatGPT is the model creating the image. I've seen multiple videos on social media where people will ask ChatGPT to generate some image like a map or sheet music. Then, when the image has something wrong, they're like, "See, ChatGPT doesn't know where the states are" or "ChatGPT doesn't know the notes to Silent Night". ChatGPT has vast geographical and musical knowledge, but the image model might not.

It is incorrect to make assertions about an LLM's capabilities based on an image generated by a different model on the same website.

AI 'Slop'

One issue we saw shortly after AI image generation came out was the proliferation of AI 'slop'. This slop is meant to garner views, and usually mixes several keywords or themes that older people like to see on Facebook. Examples include people with disabilities, people in poor countries, religious motifs, cute animals, beautiful women, and people doing notable crafts. These images are generated and published at an alarming rate to farm views, with little to no quality control. Here are a couple of examples.

Social media companies have cut down on AI slop images, but AI slop videos are increasing. (We'll cover that more in the video section.)

How can YOU use AI images?

As with AI text generation, AI image generation is widely publicly available via mainstream AI providers like OpenAI and Google. Both ChatGPT and Gemini incorporate image generation models into their interfaces.

I really like using AI image generators to create bespoke images for slideshows and blog posts. I would've used public-domain images otherwise, but this way I can tweak the image to my liking. Also, since most of my posts are technical, it feels appropriate to incorporate images with an AI aesthetic. The thumbnail for this (and several other) of my blog posts is generated by AI.

Anti-AI Art Sentiment

However, I have noticed anti-AI art sentiment online. Many people are really mad about or scared of the idea that AI can create images well, often because they see it as displacing human creatives. People have even led boycotts of firms they perceive as using too much generative AI. I don't feel strongly on the issue personally, but it is important to realize that using AI images may make a subset of your audience quite upset.

Audio Generation

I have a paper under review where we paid thousands of people to take a political phone call. Unbeknownst to the respondents, the person they were talking to was an AI with an AI voice. Only about half of the respondents realized they were talking to an AI.

AI voices are often cloned from a real person. In our paper, we did an extensive pilot study and found that humans were able to correctly infer characteristics of the AI voices. For example, people could guess the person's ethnicity and gender with super high accuracy. These voices are perceived as being very lifelike and have many human characteristics.

When I was growing up, computer-generated voices sounded robotic and distorted. Here's Microsoft's SAM (1998) saying how much it likes pizza.

What does a fully AI-generated voice sound like? Here's a voice from OpenAI, reading the same script with expressive emotions. This is just one example, showcasing one emotion. AI voices can accurately capture the range of human emotion.

Just as with AI images, the downstream effects of this are potentially a little worrisome. Scammers can convincingly clone the voice of your loved ones with just a small sample of their voice. LLM-powered phone calls are being regulated by the FCC, but they'll have an easier time sliding under the radar as the voices get more and more advanced.

AI-Generated Music

One other interesting example is AI-generated music, which has been getting more and more advanced. You may have heard that an AI-generated song was #1 on the Billboard country chart. That's not quite an accurate representation of what happened. In reality, the song, "Walk My Walk," reached No. 1 on the Country Digital Song Sales chart. This is not a 'listening popularity' chart, but a 'number of purchased downloads' chart.

The song only needed to sell 3,000 copies to do that. That means that since the song cost $1, they could've 'topped the chart' for about 3 grand.

In spite of all of that, it's pretty crazy that fully-AI-generated music is good enough for anyone to buy it at all. Content that is 100% generated by an AI model is not currently copyrightable under US law, so the song's in the public domain. That means I can put it right here in my blog post for you to listen to.

Generating music can be really fun. One service, Suno, lets users generate music by just providing the lyrics. One user provided the following lyrics to make a metal song:

I confess, I don't know too much about generating music with AI. I'm not a very good musician, but I love working at making music myself. Just like I don't use AI to write my blog posts for me, I don't have any desire to use it to make music for me. I'd rather improve my own skills.

That's not to say that I think playing with things like Suno (generating the Dog song) is bad. I just have never gotten into it.

Video Generation

Generating a video is WAY harder than generating an image. If you think about it, a video is about 24 images per second, where everything has to be consistent (movement, etc.) between frames. On top of that, you need to generate correct corresponding audio.

While image generation has been really good for a couple of years, video generation had its 'ChatGPT Moment' in the last few months. Just like ChatGPT's widespread availability and strong capabilities led to its quick and widespread adoption, models like Veo 3 and Sora have led to widespread video generation adoption.

How far has AI video come? One classic test is to use the system to generate a video of Will Smith eating spaghetti. The following video compares the progress from 2023 to 2025. Note how much more realistic video generation has gotten.

You can do lots of cool stuff with modern AI generators. Someone in my lab decided to try out Veo 3 (Google's new AI video system), and use it to bring one of my original Lego creations to life.

AI Video Slop

If you thought AI image slop was bad, video is WAY worse. Social media has transitioned to short-form video content (think TikToks or Reels), which trained us to like short videos right as the technology to generate them came out. The same people who were creating AI image slop are creating video slop. Some of it is obviously slop:

However, much of it actually looks good. These videos appear to be fairly legitimate, and nothing in them screams, "This is AI". As an internet user, you need to be wary of generated videos.

Energy Costs

Many people are very concerned with the energy costs associated with generative AI. If you're an environmentally conscious person who turns off the lights when you leave a room, you may want to be aware of this too. Using an LLM for a query takes about 3 watt-hours of energy (about the same as powering one incandescent lightbulb for 3 minutes). Generating an image takes about 30 watt-hours (leaving on one lightbulb for half an hour, or charging your phone). Videos take way more: around 1 kilowatt-hour (running a microwave for an hour) to generate a short video.
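If you want to check those comparisons yourself, the arithmetic is simple enough to sketch in Python. The energy figures are the rough ones quoted above. The appliance wattages (a 60 W incandescent bulb and a 1000 W microwave) are my own assumptions, so swap in your own numbers.

```python
# Rough per-use energy figures quoted above, in watt-hours.
ENERGY_WH = {"LLM query": 3, "image": 30, "short video": 1000}

# Assumed appliance power draws (not measured): 60 W bulb, 1000 W microwave.
BULB_WATTS = 60
MICROWAVE_WATTS = 1000

def minutes_of_use(energy_wh, appliance_watts):
    """How many minutes an appliance could run on the given amount of energy."""
    return energy_wh * 60 / appliance_watts

for task, wh in ENERGY_WH.items():
    bulb = minutes_of_use(wh, BULB_WATTS)
    print(f"{task}: about {bulb:.0f} minutes of a {BULB_WATTS} W bulb")

# How many LLM queries use as much energy as one short video?
queries_per_video = ENERGY_WH["short video"] / ENERGY_WH["LLM query"]
print(f"One short video is roughly {queries_per_video:.0f} LLM queries")
```

Under these assumptions, one short video costs about as much energy as a few hundred LLM queries, which is why the modalities are worth thinking about separately.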

Honestly, you're probably okay to use LLMs, but you might want to generate images sparingly, and not create video unless absolutely necessary. Churning out AI slop videos isn't great for the planet, and it's probably not great for the internet either.

Conclusion

AI progress has been crazy these last few years. We all have tools at our fingertips that let us create text, audio, images, and even video of almost anything we can think of. This is super exciting, but we need to be careful: anyone can use AI for nefarious purposes. With a little bit of vigilance, and a lot of creativity, generative AI is a new frontier to explore.

What will YOU do with generative AI?