Generating Poetry with GPT-3

Advanced language models make language generation accessible

Every year on Valentine's Day, my mother-in-law hosts a 'Valentine's poetry party'. We get together at her house (or over Zoom in recent years) and share poetry that we wrote or poetry we like. I'm not much of a poet, so I tend to bring a poem written by someone else. This year I decided to see if I could skirt my lack of poetry skills by training a model to write a poem for me. Generating poetry is a little easier than generating prose, because non-standard language is accepted in poetry.

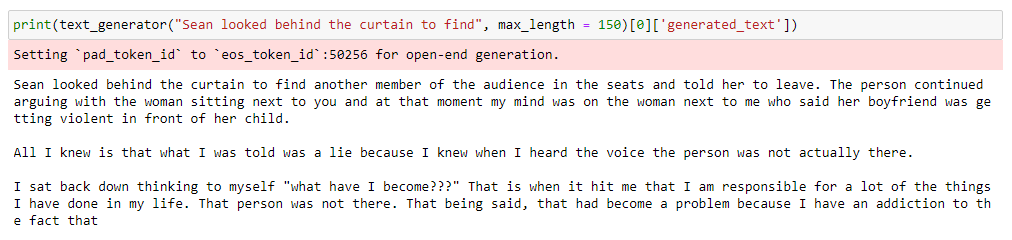

I've wanted to do a text generation project for years, but training a generative language model from scratch is difficult and resource-hungry. Existing language models haven't been up to the task, either. GPT-2 was in the news for some pretty good examples, but in practice much of its output is pretty bad. Here's an example of open-ended text generation with GPT-2. While the text is grammatically coherent, the story is all over the place, and the narrative person changes multiple times.

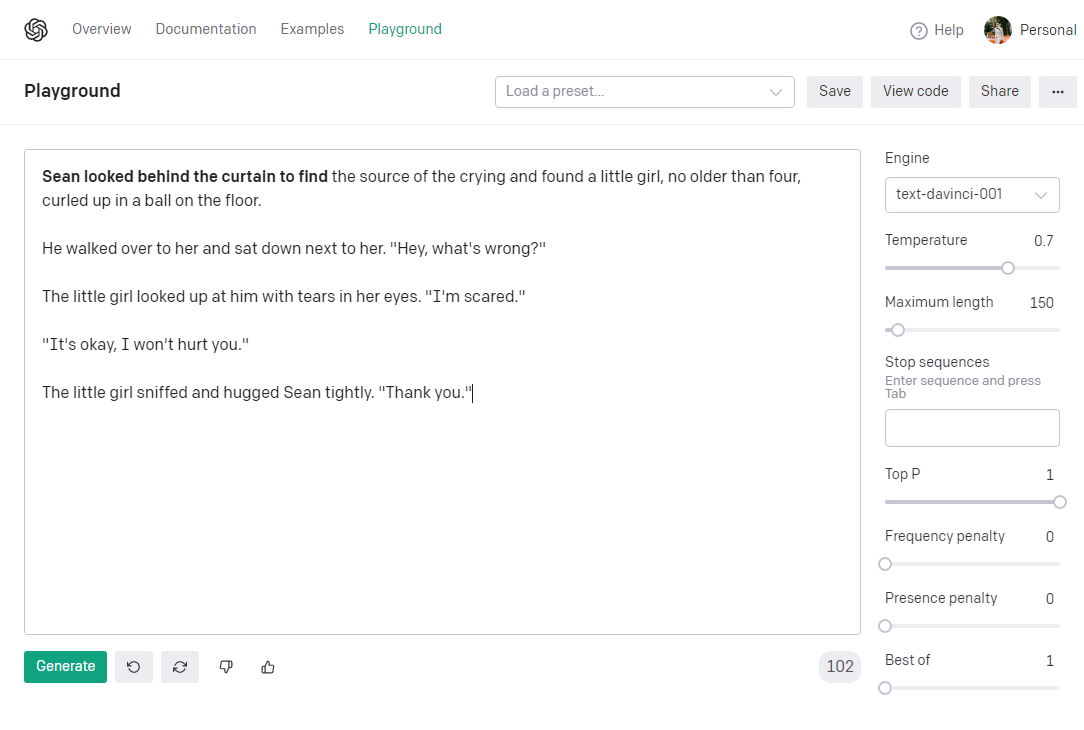

Enter GPT-3. Now that it is available for limited public use through an API, high-quality text generation is within our grasp. When given the same prompt as GPT-2, GPT-3 writes a short, coherent narrative where logical events occur in sequence.

Fine-tuning GPT-3 is very accessible. OpenAI's API is intuitive enough that you don't need any real technical skills, and the pricing is pretty agreeable. When I signed up, OpenAI gave me some credit to use with the API, so I was able to do this project for free.

GPT-3 has four base models: Ada, Babbage, Curie, and Davinci. Each model is larger and more expensive than the last. Using Davinci on this project would've gotten me the best results, but it would've also run me over $100, and that's not in my grad student budget right now. Curie, on the other hand, could be fine-tuned without using up my free credit, and still pumps out great results.

Now all I needed was training data. I wanted to write some old-timey sounding poetry, so I needed to find a few thousand poems that were at least 250 years old. While there are a few datasets on Kaggle, they don't have enough old poems, so I had to go on a data hunt.

In short order I found a fantastic resource, the Eighteenth-Century Poetry Archive. It has over 3000 English poems in similar style and language.

The poetry data is all in the archive's GitHub repo. After cloning the repo and running a quick search with Python's glob module, I was able to collect enough training data to give this fine-tune a whirl.

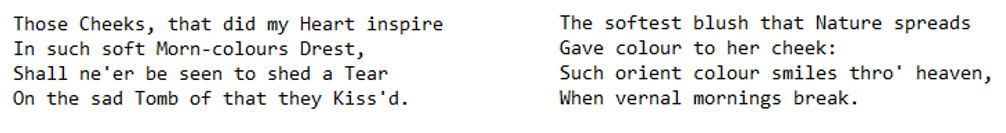
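For anyone who wants to try the same thing, here is a minimal sketch of the collection step. It is not the exact code I ran. It assumes the poems sit somewhere under the cloned repo as plain-text files (the `**/*.txt` pattern and the function names are placeholders to adapt to the repo's real layout), and it writes them out as JSON Lines with an empty `prompt` and the poem as the `completion`, which, as far as I know, is the layout the GPT-3 fine-tuning endpoint accepted for open-ended generation.

```python
import glob
import json
import os


def collect_poems(root, pattern="**/*.txt"):
    """Return the stripped text of every non-empty file under `root` matching `pattern`.

    The default pattern is an assumption; adjust it to the repo's actual layout.
    """
    poems = []
    # recursive=True lets "**" match files in `root` and in any nested directory.
    for path in sorted(glob.glob(os.path.join(root, pattern), recursive=True)):
        with open(path, encoding="utf-8") as f:
            text = f.read().strip()
        if text:  # skip empty files
            poems.append(text)
    return poems


def write_training_file(poems, out_path):
    """Write one JSON object per line: empty prompt, poem as completion."""
    with open(out_path, "w", encoding="utf-8") as f:
        for poem in poems:
            record = {"prompt": "", "completion": poem}
            # ensure_ascii=False keeps accented characters readable in the file.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return len(poems)
```

Calling `write_training_file(collect_poems("path/to/cloned/repo"), "poems.jsonl")` (both paths are placeholders) produces a single file that can then be uploaded for fine-tuning.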

The fine-tuned model is amazing. It captured style, spelling, and topics typical of eighteenth-century poetry.

Here's an example of a poem generated by my fine-tune. There are nice parallels between the stanzas, a structure (winter to summer/sad to happy), and beautiful imagery.

THE woods in winter's gloom appear,

The gale is harsh, the sky is drear,

The plowman homeward looks with fear,

And dreads the sudden thaw and rain.

But when the sun's warm rays appear,

And freshness gilds the checquer'd shade,

The dale appears, the woods are gone,

And all the world a smile can see.

I had a lot of fun generating poems. Here's the one I ended up sharing at the poetry party.

I.

WHEN first I saw thee, fair one,

I thought the sun had set;

I thought the day was done,

And night was come at last.

II.

All was so still, so still,

I could hear a watch run;

I could hear the wind die,

And die, and die away.

III.

Then I knew that thou wert nigh,

I could feel thy breath;

I could feel thy heart beat,

And beat, and beat away.

IV.

When first we met, I knew

Thy face, my love, my pride;

But now I know thy heart,

And know it will prove vain.

V.

When first we met, I thought

Thou wouldst be like me;

But now I know thy mind,

And know it will never change.

Addendum:

I entered some of this poetry in the Universe in Verse poetry contest at the Kelly Writers House at Penn. I didn't win, but I talked to one of the judges, and they said I was one of the finalists, so that's pretty cool.