Generating Piano Music

We've seen Language Models used in a lot of text applications, but they can be used for more than just modeling language.

Language Models

If you read this blog regularly, you've certainly seen me mention language models. As a quick refresher, language models are built on the idea that natural language follows a distribution that can be modeled. (Natural language refers to a language that people speak like English or Japanese) A simple and ubiquitous example of a language model is one used on the predictive text feature on your keyboard. Large Language Models (LLMs) like ChatGPT are able to model language so effectively they learn how to do tasks like math.

Language models are so capable at approximating natural language that researchers have experimented with using them in other contexts. For example, langauge model architecture has been used to model protien structure. I don't really care about proteins, however. I'm interested in modeling music.

Modeling Music

In order to model anything using deep learning, the first thing we need is a bunch of data. Since we're trying to teach our model to write piano music, we need a bunch of piano music.

There are a lot of ways of representing music. While many cultures have invented ways of recording music, starting about 3,000 years ago. Sheet music as we know it was invented around 1,000 years ago. Recording audio in a physical medium (like a wax cylinder or a vinyl record) is a little over 100 years old. Digital audio recording is around 50 years old. You've probably interacted with different ways of storing digital audio, like .mp3 and .wav files. These files contain digital representations of an audio recording. These can be difficult to turn into something text-like to model with a language model. While there have been interesting applications teaching different machine learning models to generate raw audio from audio examples, we're interested in symbolic music generation: creating a symbolic representation of music (like sheet music).

Thankfully, there's a popular file format called Midi. Unlike other audio files, midi files contain a set of instructions on how a device (like a computer) should play a song rather than a recording of a song being played. It may be helpful to think of midi files as a sort of sheet music for computers. Because midi is a ubiquitous file format with a history of over 35 years, there is an abundance of midi data on the internet. Just like LLMs were enabled by the massive amount of freely available text online, there are enough midi files for us to train a music model.

The Data

We collect roughly 23,000 piano songs from 4 midi datasets:

- MAESTRO, containing 1200 midis transcribed from piano competition performances.

- The GiantMIDI-Piano dataset, comprising 10,000 classical piano midis.

- The relatively small Tegridy-Piano dataset of 233 songs.

- The adl-piano-midi dataset of 10,000 piano covers of modern songs extracted from the LAKH midi dataset.

These four datsets cover everything from Bach's tocattas to La Macarena; White Christmas to an Eminem piano cover. It would take over 3 months straight to listen to the whole dataset.

Tokenization

We talked about tokenization in the last blog post. As a reminder, tokenization takes something a machine learning model can't understand (like text) and turns it into something the model can understand (like numbers). What's different about modeling midi instead of natural language is that we need a two-part tokenizer. First, we need to turn our midis into text. Then we use the same tokenization techniques as last time to transform that text into numbers.

So how do we turn our midis into text? Thankfully, someone else has already figured that out. Multiple people, in fact. There are several midi tokenizers implemented in a convenient python library, MIDITok. After reading a bunch of papers, I decided to use the REMI tokenization system.

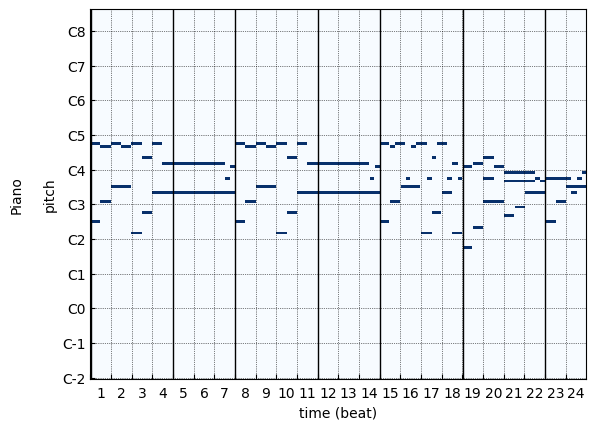

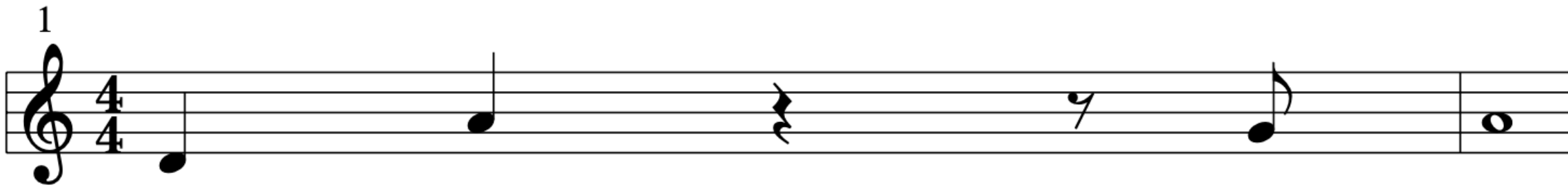

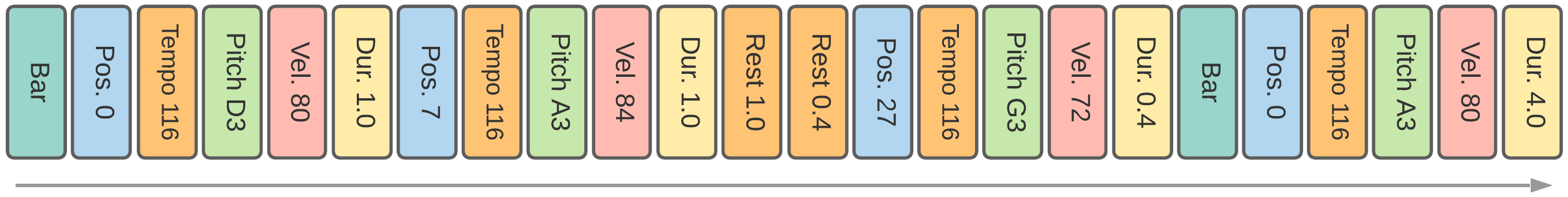

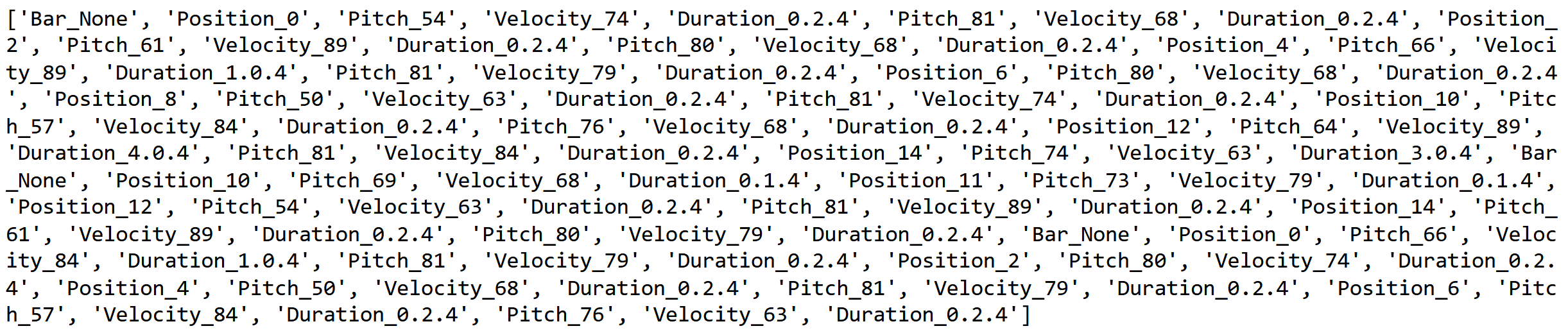

So the REMI text corresponding to the pianoroll from earlier looks like this:

There are some additional things to take into consideration while tokenizing the data. In normal text, the reader is free to read at the pace he or she chooses. However, midi instructions are very specific, not only to the overall tempo of the song, but also to the exact duration of each note. This can cause big problems for a machine learning model. It might not learn that a short note at a fast tempo is the same as a longer note at a slower tempo. It also can cause problems because a short note that lasts 13 milliseconds and another short note from a different song that lasts 15 milliseconds are essentially the same. However, the model can have difficulty generalizing (understanding that slightly different things that are similar should be treated the same way). In order to fix this problem, we quantize the notes as part of our tokenization process. This means we 'round' the note durations so the model learns a manageable number of note lengths. Similarly, we get rid of really high or really low pitches so the model learns a manageable number of pitches. Finally, we quantize the note velocities (volume) into a manageable number as well.

Training a Music (Language) Model

Training our music model is relatively straightforward. We use the same Transformer architecture as GPT-2 (a model we discussed last post as well). After creating a GPT-2 model with randomly initialized weights (it doesn't know anything), we train the model (using the huggingface library) on all of our nicely tokenized data. Since I have access to the BYU supercomputer, this can be done in under an hour.

Why do we think a transformer-based language model architecture is a good choice for this task? The answer is in something called self-attention. At each transformer block, the model does something called multi-headed self-attention. What that lets the model do is learn dependencies between tokens in the sequence. In the case of text modeling, it lets the model learn the relationships between entities. For example in the sentence: Sam went across the street to his house. the word Sam attends strongly to the verb went, and to the word his (because Sam is the he in this case).

Just as self-attention helps a normal language model to learn pronoun reference, in the case of music we can leverage self-attention to create harmonies or repeat musical motifs.

Results

The first model I trained did not do too well. Here are a few examples:

Obviously not great. The music sounds rather like a child slamming on the keys, either in big, ugly chords or in rapid taps. Additionally, lots of the generated songs had long pauses either in the beginning or middle of the pieces. I was really disheartened by this result so I did some analysis to figure out what was going so wrong. I realized a few issues that were contributing to this messy-sounding music.

The first problem was with my quantization I metnioned earlier. I needed to chunk the tempos and note lengths into fewer, broader chunks. While this could hurt expressiveness, that was a small price to pay for the model not to sound erratic, and let it learn rythmic structure.

One huge issue is that I didn't have enough data to train my model. My first model was trained on about 11,000 songs. According to a very infulential paper on neural scaling laws, that's nowhere near enough. I was able to find some more datasets (mentioned above) that doubled the amount of data the model saw. While it could still use a lot more data, this was a good start.

To address the issue of the long pauses, I looked at my dataset and realized many of the songs began with 4 bars of silence. While that may be good for a concert, it meant that the model was learning it was a good thing to pause for 4 bars all the time. I wrote a program that went through all 23,000 files and replaced any long leading silences with just one bar.

I trained a second model with these improvements and boy did it sound better. It still wasn't what I'd hoped for, but the improvements were easy to see.

This still doesn't sound like good piano music, but at least it's much more melodic. Still unsatisfied, I trained a new model with another round of improvements. I got rid of Byte-Pair Encoding (BPE) in the tokenizer. Many LLMs use BPE, where it helps performance. The exact details are beyond the scope of this post. A recent paper suggested it would help music models, but removing BPE really helped. At this point the model was sounding better, if still a little wonky.

This was much better, but I figured I could eke out a few more improvements. I increased the size of the GPT-2 model by a factor of 1.5. I would've liked to make it bigger, but in order to scale a model you also need to scale the data it's trained on. I didn't feel like I could make the model any bigger without increasing the amount of data.

At this point I had done four rounds of model training, and my model had gone from slamming on the keys in an erratic fashion to writing music that sounds pretty good.

Generation

Generating new tokens with an autoregressive language model is a tricky proposition. Think about your predictive text keyboard. If you just tap the middle suggestion, you can write a whole sentence, but it probably won't be very good. Additionally, it's deterministic, meaning that if you try to complete the same sentence several times, you'll get the same answer each time. If you want creative responses, this determinism is no good, and we are looking for creative responses.

To solve this, language models sample as they generate. That means instead of greedily picking the most likely next token (tapping the center button of the predictive text), they choose from several good top options. You as the user can control how randomly the model makes that selection with a parameter called the temperature. A low temperature example will be boring and repetitive, whereas a high temperature may generate confusing output. These temperature rules apply to our music model just like they would a language model.

Tempertaure Examples

I generated several examples with different temperatures to illustrate this:

Very Low Temperature

Slightly Low Temperature

Just Right (Goldilocks) Temperature

Too High Temperature

Really Too High Temperature

We can see a progression from boring to good to kind of crazy in these examples. It's important to realize all of these were generated with the same model, only fluctuating the temperature parameter. Tweaking one number at generation time drastically changes the quality of the output.

Conditional Generation

Since our Music Transformer is a language model, it behaves like one. Language models are best at generating when they are continuing something else. For example, when you interact with ChatGPT, your question or prompt lets it know how to answer. Similarly, we can provide our music model with a short musical prompt and have it continue the song.

There are a few evaluation criteria to determine if the model completes well. First, does it keep the same style? While we can't expect the model to guess how the original composer completed the song, we can expect it to continue the song in a stylistically appropriate fashon. Similarly, we want the model to keep the same tempo and key as the old song. If the prompt is a jolly jig and the model switches to a slow dirge, it's not very good. In order to evaluate the model for consistency, I prompted the model with a short clip from three different pieces in three different genres: Pop Piano, Ragtime, and Choral.

Prompt: Yiruma-River Flows in You

Completions

Prompt: Pavel Chesnokov-Cпасение coдeлaл

Completions

Prompt: Scott Joplin-The Entertainer

Completions

Overall, that was pretty good. While not all of the completions were perfect, (I wanted you to get a feel for exactly what the model's capabilities are) the completions are consistent and tend to sound appropriate.

Conclusion

I'd been wanting to train a language model to generate music for about a year now. This project was pretty in-depth (it took me about 50 hours), and I'm happy at the quality of the model and its outputs. It's learned to write new piano music in a variety of genres, all from reading 3 months of sheet music. This last piece isn't exceptionally beautiful, but it really feels like music written by an AI.

If you have a little bit of technical know-how, you may want to try out the model at the Google Colab Example.