Generating Pictures of Me!

With Dreambooth, you can fine-tune a diffusion model using very few reference images.

Diffusion Models

Diffusion models are the state-of-the-art when it comes to AI generated images. Open-source models like Latent Diffusion and Stable Diffusion that can generate convincing images of anything are available to the public, making AI art more accessible than ever. For an explaination of how they work, check out my previous post in which I trained my own diffusion model from scratch.

That was great for training from scratch, but just like we've fine-tuned language models in some of my previous posts, we can leverage someone else's pre-training by fine-tuning Stable Diffusion. This model's pre-training means that instead of semi-abstract images, we should be able to get pretty realistic, grounded images in several styles.

Finetuning with Dreambooth

Finetuning a model allows us to take a model that's been trained on a generic task and use its general knowledge on a specific task. In the case of image generation, we start with Stable Diffusion, a model that gernerates high-quality images given a text input. Finetuning models can still be an involved process, requiring hundreds to thousands of examples to teach the model what to do.

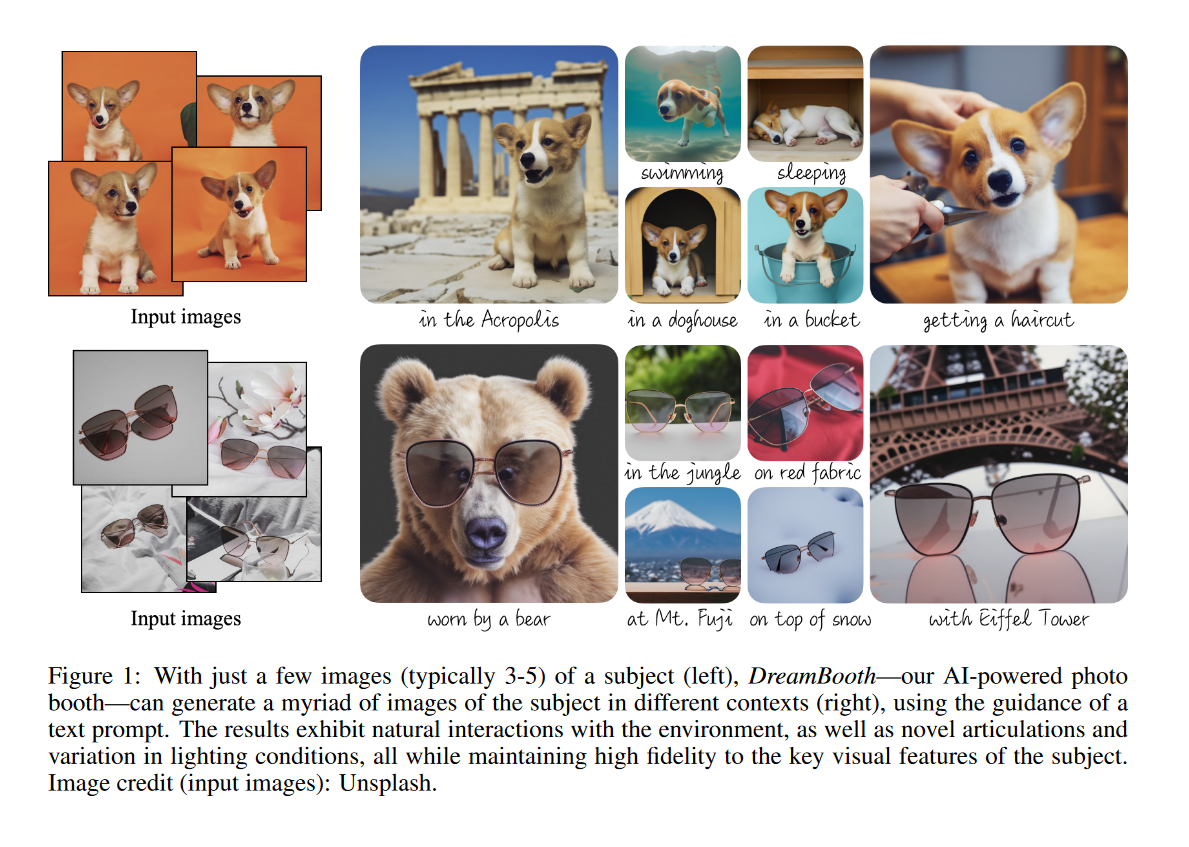

Enter Dreambooth. This technique, developed at Google Research allows us to fine-tune image generation models using as few as 5 reference images.

Computer Vision is not my area of expertise, but I'll try and give a brief explaination.

Dreambooth works by binding the pictures of the subject (in this case, me) to a unique identifier. This identifier can then be used to synthesize new pictures of me in new contexts, so long as I include the identifier in the prompt.

Prior Preservation

In order to teach the model the 'gist' of what we're going for, we first have it generate about 200 images of something like what we're trying to teach it.

In this case, I generated pictures of 'a guy in his 20s', which is what I am.

This helps the model to understand that I am 'a guy in his 20s' and tweak its understanding of 'a guy in his 20s' to me.

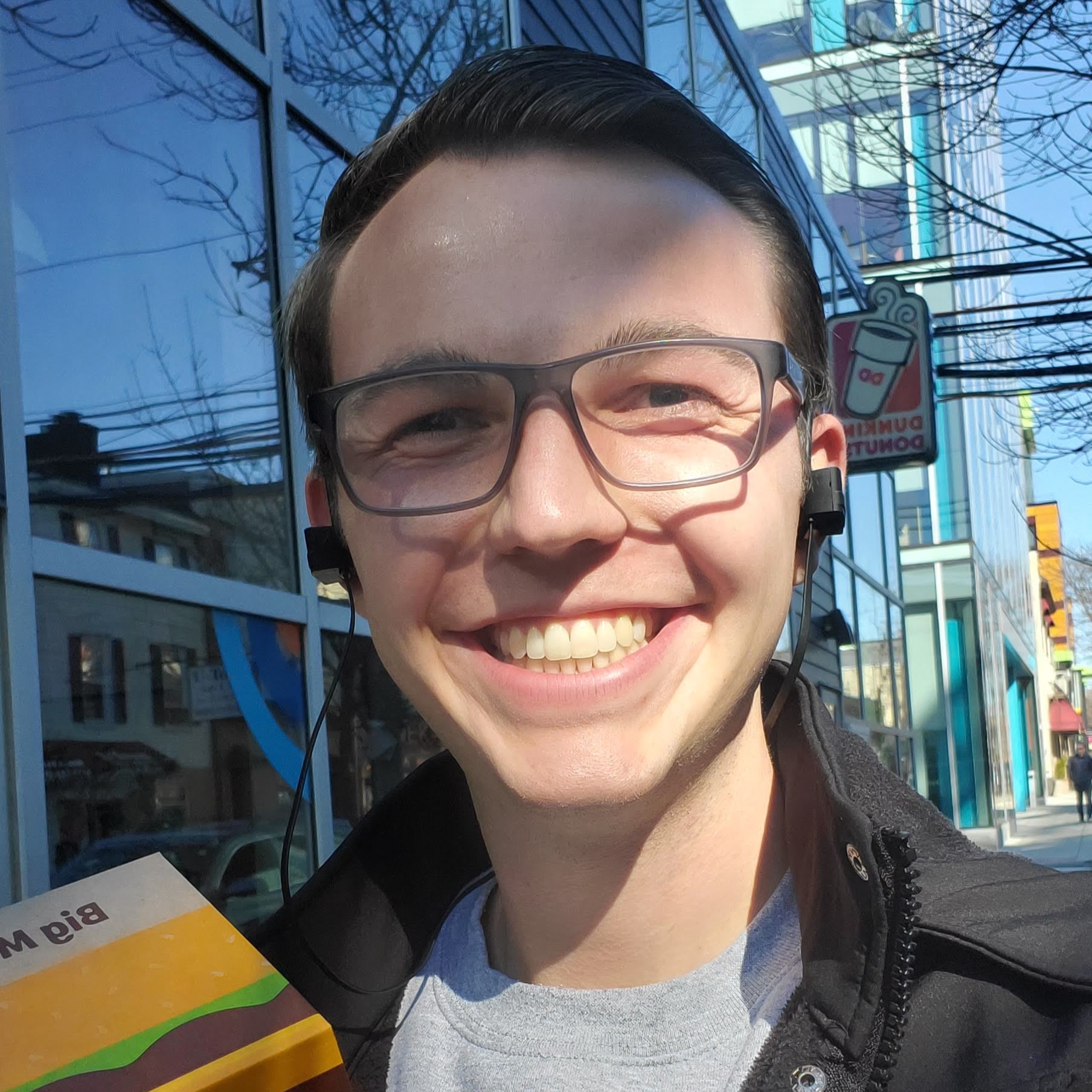

Training the model is straightforward. There's a nice example script provided by HuggingFace that you can tweak and use for yourself. I grabbed 8ish pictures of myself and went to town. The model took under an hour to train on an A100 GPU.

A major part of generating good images is prompt engineering. (For more on that, see my previous post on prompt engineering.) Essentially, in order to get the best output from the model you need to be very exact in your query. Interestingly, just asking for a better image usually works. Tacking on phrases like 'very detailed' or 'contest winner' will make your image better. Prompt engineering is a whole subfield with plenty of guides out there.

Results

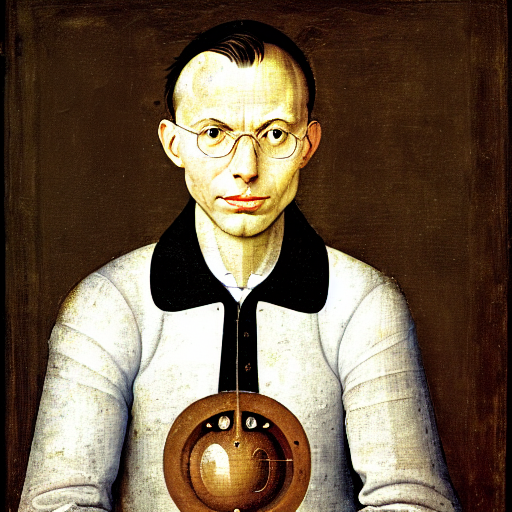

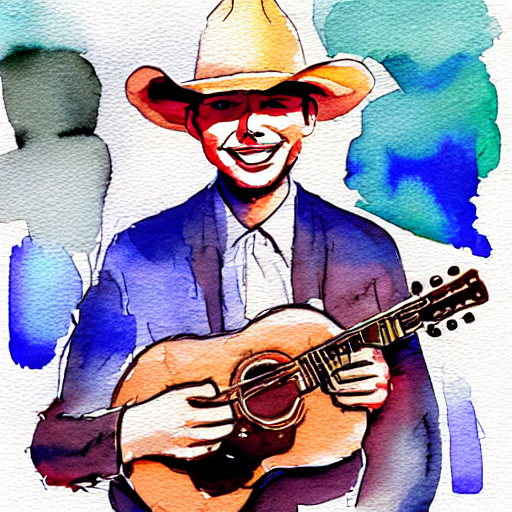

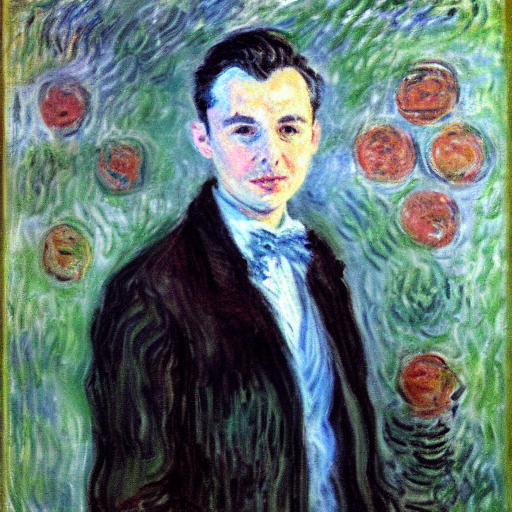

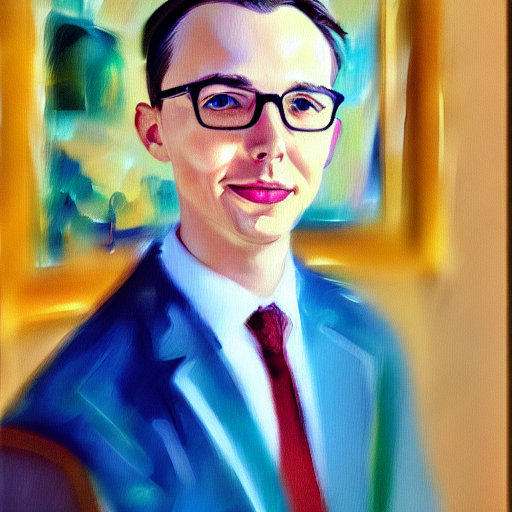

Right off the bat, we can see some great results. The model learned what I look like, and can put me in tons of cool contexts.

It can change my age, clothing, and even animation style.

Problems

But it's not perfect. I noticed that about 25% of the time the model would make me look vaguely to moderately Asian, and sometimes give me a beard.

After some investigation I realized the problem was with my prior preservation images. It turns out the prompt 'photo of a guy in his 20s' has a lot of bearded men and a lot of men of east Asian descent.

Solution

If the problem is as simple as bad prior preservation images, it shouldn't be hard to solve. I tried again with the prompt 'photo of a white guy in his 20s, clean-shaven'. That solved the issues with the beard and the race-shifting, but then a new issue cropped up. Now I wasn't wearing glasses. The prompt 'photo of a white guy in his 20s, clean-shaven, glasses' solved that.

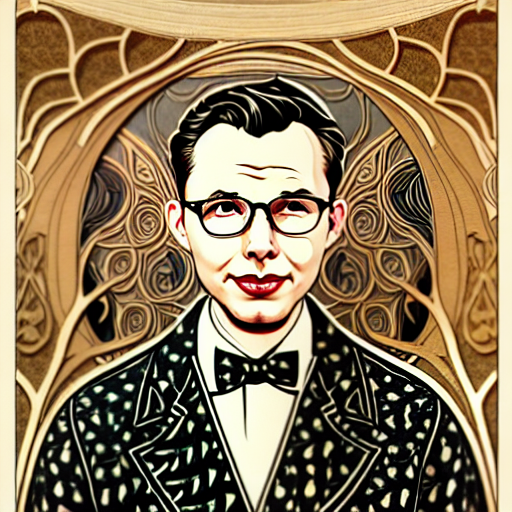

With good prior-preservation images and a few tweaks with training hyperparameters, I finally have a model that does exceptionally well at generating pictures of me. Every so often my glasses come off or the image comes out wonky, but with a few tries I can be anything.

Final Thoughts

Combining Dreambooth and Stable Diffusion was very simple and very cool.

It's exciting to see my AI image progress.

In the course of six months, I went from playing with DALL-E mini to training my own diffusion model from scratch, and now fine-tuning for model personalization.

I can't wait to see what's next.