MTGPT-2

I trained GPT-2, a little old language model, to generate Magic: the Gathering cards.

Back in 2022, I wanted to use language models to generate Magic: the Gathering cards. I trained a bunch of models including statistical models and LLMs, did a bunch of crazy stuff, and never finished the project/never wrote the whole thing up.

In order to generate the text for the cards, I fine-tuned GPT-3 on all of the Magic creatures that existed at the time. That was okay, but I didn't have ownership of the models I fine-tuned. If I wanted to generate a new card, I had to pay OpenAI a fraction of a cent each time.

It's not 2022 anymore. In the last three years several things have happened to make this project much easier:

- I have gotten better at Machine Learning. I finished my Master's and am two years into a PhD.

- I have free access to a bunch of compute through my research lab.

- They've made a LOT of new Magic cards. The number of cards today is much greater it was in 2022 (30,000 instead of 20,000).

AI and Creativity

Now, this entire project is entirely redundant to some degree. I am confident that if you ask ChatGPT to generate a new Magic card for you, it will do a technically great job. ChatGPT has read basically the entire internet, including Magic strategy, Magic cards, Magic fanfic, etc. It knows more about the topic than almost anyone. But there's nothing satisfying about asking an LLM to do something creative for you. On the other hand, training my own model is a creative act in itself. The base GPT-2 models I'm using know very little about Magic: the Gathering. I'm teaching them, and I feel some ownership of the result. The point of this project isn't to make the best Magic card generator that could possibly exist, it's to make my Magic card generator. When I'm done, I will have the trained model, not OpenAI. I can use it without paying someone else to use their model. While you may have interacted with the original ChatGPT (3.5), or GPT-4 or GPT-5, these are all closed models. That is, while you can interact with them, you can't download the models for your own use. GPT-2 was the last time OpenAI was actually open.

On top of all that, there's something neat about using a less-capable model for a task like this. GPT-2 was trained in 2019, and this would have been cutting-edge back then.

You may have noticed something similar with AI art. Early AI art was technically much worse than current AI generated images. However, the limitations gave them more 'soul'. For example, Edmond de Belamy, the first AI artwork sold at auction, was created in 2018 with rudimentary image generation. While modern image models can make a picture of anything that looks WAY better than that, maybe it's not as artful.

This Magic card generation system uses GPT-2 models from 2019, about the same era as Edmond de Belamy. I'm hoping that the models' limitations might inject something surprising into the final product, like the artfulness from limitations in Edmond de Belamy.

Magic Cards

Magic: the Gathering is a fantasy card game, and the original Collectible Card Game. In the game, two or more players take on the role of wizards who battle each other by casting spells and summoning creatures. (I know it's nerdy) Created in 1993, MtG is wildly successful. Counting all languages and artworks, there are about 90,000 different cards. It is estimated that over 50 billion Magic cards have been printed throughout the game's history.

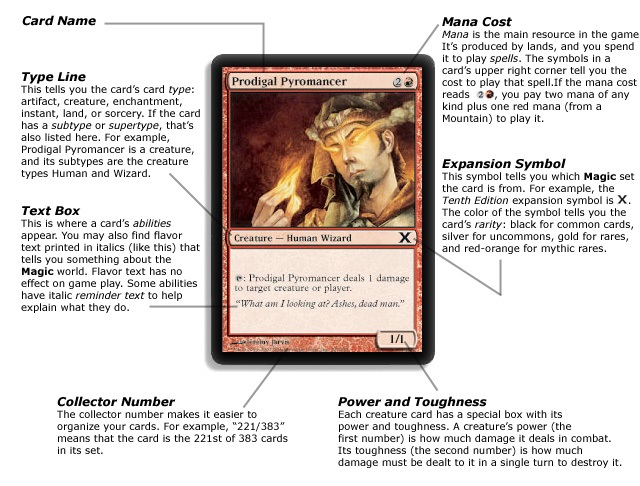

I started playing Magic with my friends in 2008, which means I have a 17-year history with the game at this point. I've used LLMs before to do Magic-related projects. In order for you to understand this project, you need to understand Magic cards a little bit, so here's a card explanation from some old publicity materials. (I used this image in an old post once, which is why it might look familiar to you).

Colors

In Magic, there are 5 colors of magic, White, Blue, Black, Red, and Green.

Each of these colors is produced by a different type of land (the resource in this game), and each color represents a different kind of magical power.

Each color has specific strengths and weaknesses, as well as ideological values-- In other words, color will be important to our task of generating Magic cards. Here's a really short explanation of each color (this will be helpful to know later once we make some cards).

- White represents order and community, building large armies of small creatures and using protective magic to control the battlefield and enforce its laws.

- Blue embodies intellect and the pursuit of perfection, controlling the game by drawing extra cards to gain knowledge and canceling an opponent's spells before they can even take effect.

- Black is the color of ambition and amorality, achieving power by any means necessary, whether it's destroying opposing creatures, reanimating the dead, or sacrificing its own life for a winning advantage.

- Red channels passion and chaos to act on pure impulse, overwhelming opponents with fast, aggressive creatures and spells that deal direct damage to any target.

- Green is the color of nature and growth, embracing the law of the jungle by accelerating its resources to summon gigantic, powerful creatures that overpower the opposition.

Making Cards

Now, you have an idea of what I was shooting for, and what a Magic card looks like. This semester, I'm taking my final class of my PhD. As part of this class, we have small(ish) projects every two weeks. One of the projects is the 'text generation project'. The project description is both short and vague:

Select and code a project involving text generation using transformers. Try your project with several different text generation models. Write a report to explain your project. Include your code and explanations of how you approached and solved the problem.

Well, fine-tuning a transformer-based language model to generate Magic cards technically fulfils the requirements of this project, which is why I decided to try this again.

Because I'm doing this for a class, I didn't even go back and look at my old code from when I tried this back in 2022. I wanted this to be 100% new for academic honesty reasons, so I started from scratch. Also last time, I decided to simplify the task as much as possible, and my model could only make creatures, which is only one type of Magic card. This time, I wanted to include all of the main types of Magic card.

Once again, I'll need to help you understand all the card types, so here's a short explanation.

- Creatures are magical beings and warriors that remain on the battlefield to attack an opponent's life total and defend against incoming attacks.

- Lands are the foundation of a player's resources, played once per turn to produce the magical energy required to cast other cards.

- Artifacts are magical objects, equipment, or constructs that remain on the battlefield to provide powerful abilities and are usually colorless, allowing them to be used in any deck.

- Instants are fast-acting spells with a single, immediate effect that can be cast at any time, providing the flexibility to react to an opponent or play a trick during their turn.

- Sorceries are powerful spells with a one-time effect that can only be cast during a player's own turn, often with a greater impact than instants to reward their deliberate timing.

- Enchantments are persistent spells that remain on the battlefield to create long-lasting magical effects, altering the rules of the game or bestowing new powers upon other cards.

- Planeswalkers are powerful summoned heroes who fight on the battlefield, using one of their multiple unique abilities each turn until their loyalty is depleted by enemy attacks.

Review of Pretraining and Fine Tuning

I've been over this before, but as a quick reminder - models are often pretrained on a variety of data. This imbues them with a sort of 'background knowledge'. Then, people can fine-tune these models on a specific task. In this way, the model can leverage its pretrained background knowledge and learn how to do something specific very quickly. This is called Transfer Learning.

For example, an image model might be trained to learn a bunch of different types of objects (planes, dogs, cats, boats, etc). Then, you can fine-tune that model to differentiate between real bears and teddy bears. Even if the model has never seen a picture of either type of bear before, it will learn reasonably well how to tell the difference after being fine-tuned only on a relative few bear and teddy bear pictures. If you were to try and train a model from scratch on the same number of bear pics, it would fail.

LLMs are pretrained on billions or trillions of tokens (words) of text. As a consequence, they already understand the grammar of English. They also have some amount of world knowledge, all from their pretraining. Hopefully, we can leverage this to get pretty good Magic cards. If we were to try and train a model from scratch on only 30,000 Magic cards, it would do much worse because in addition to learning the rules of Magic, it would also need to learn the rules of English.

Modeling Anything as Text

Modern autoregressive LLMs are really good at generating text. If you can turn something into text, an LLM can probably learn the task pretty well and pretty quickly. (For example, you might remember when I trained a language model on text representations of music and it was able to generate new music.)

So if I can turn Magic cards into text, I should be able to fine-tune a model on that text, and make my own cards. How do I do this?

The Data

Scryfall provides bulk data of all of the Magic cards in JSON format (a common way to save data). Here's a truncated example JSON card:

{"object":"card", "id":"6b7baaa7-67f2-4902-a4c1-761d58cce1d4", "name":"Mine Security", "lang":"en", "released_at":"2025-08-19", "layout":"normal", "image_uri":"https://cards.scryfall.io/small/front/6/b/6b7baaa7-67f2-4902-a4c1-761d58cce1d4.jpg?1755524933" "mana_cost":"{1}{R}", "cmc":2.0, "type_line":"Creature — Kavu Soldier", "oracle_text":"Trample\nWhen this creature enters, conjure a card named Flametongue Kavu into the top eight cards of your library at random. It perpetually gains \"You may pay {0} rather than pay this spell's mana cost.\"", "power":"3", "toughness":"1", "colors":["R"], "collector_number":"16", "digital":true, "rarity":"uncommon", "artist":"Xavier Ribeiro"

We don't need most of this information for our project If we think back to the card picture above, we only need to keep a few of the fields:

- The card's name

- The card's cost

- The card's type line (that line in the middle that says what the card does)

- The card text

- If the card is a creature, the card's power and toughness

- If the card is a planeswalker, the card's loyalty

That's only 4 or 5 things, and they're all between a couple of words and a few sentences. Not bad!

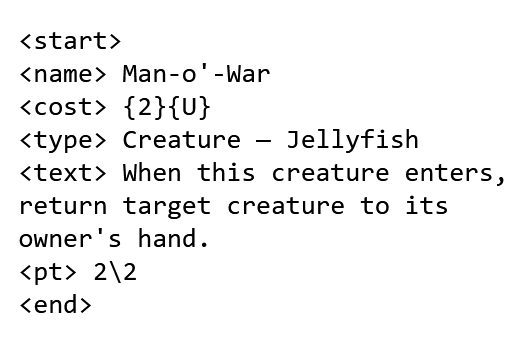

So how do we turn the cards into text? LLMs often use special tokens to help the model learn tasks. This isn't necessary anymore for today's huge models like ChatGPT and Gemini, but since I'll be training smaller, older models, this will be super helpful. I've defined a few tags to put the cards in an easy text format.

<start>, <name>, <cost>, <type>, <text>, <pt>, <loyalty>, <end>

These provide a strong signal to the model of what field they're generating. They also provide a nice output format for us humans to read. We use the s<start> and <end> tags to determine where the card begins and ends. Each tag is in a new line, so the model can learn that the end of a line means the next part of the card.

We also turn the symbols on the cards into text versions of the symbols. For example, a red mana symbol (That thing that looks like a red circle with a fire in it) is encoded as {R}.

Also, we want the model to learn that each new line is a new part of the card. To make that easier, when a card has multiple lines of text, we put them all on the same line. The text is functionally identical, but is reformatted to help the LLM.

So what does that look like in practice? Here's a card as both image and LLM text:

In 2022, I used separate statistical models to generate the mana value, power, and toughness. However, LLMs can learn numerical relationships. This time, I decided to hope the model can learn to generate the numbers and costs themselves. What numerical relationships are we hoping to learn?

In Magic, creatures' average power and toughness is usually roughly equal to their cost. A 2/2 creature usually costs 2 to play. Similarly, if a card deals 10 damage to a player (half their life total), that card should cost a lot to cast (like 7-10). We're hoping that our models can learn these numerical relationships from the training data.

Filtering the Data

We can apply this pipeline to our whole dataset, but first we need to filter our data. Magic's been around for over 30 years, and in all that time, they've tried a lot of things. There are double-sided Magic cards, cards that are two cards, and cards that you need to turn around. All of these would cause problems for us, so we filter all of these cards out.

Magic cards are often reprinted, sometimes with new art or different borders. We don't want to bias our card generator towards cards that have been printed multiple times, so we only use one printing per card name.

As of October 2025, there are 35778 unique Magic cards. Filtering out the wonky ones leaves us with 31023. That's a good amount of training data!

Training the Model(s)

With all of our data ready, we're ready to train some models. In general, we know that larger models do better than small ones. There's a tradeoff here, where larger models are harder to train and harder to use.

I decided to train models of different sizes, benchmarking how long they take to train and how well they do.

Before training models, you need to know how you'll evaluate them. You need to know how good the model is to see if your training was successful. How did I decide to evaluate the models?

Creating a Metric

I decided to create a four-part metric to evaluate the generated cards. Once the model is trained, I generate 10 random cards and manually evaluate the generations. After averaging over the generations, we know how good the model is.

The 4 parts are:

- Originality: Does the generated card already exist?

- Game Rules: Does the text on the card work in the game of Magic?

- Colors: Does the card's effect match its color?

- Numbers: Do numbers on the card roughly correspond with the cost?

Each of these is scored on a three-point scale, Bad, OK, or Good. Then we average across the 10 random generations.

Metric in hand, we're ready to train and evaluate some models. To start, I train all of the GPT-2 models on the dataset. These models are different in size (number of parameters), which has a large bearing on performance. We train each model for 3 epochs, meaning we show it all of the training examples 3 times. I have access to a really nice machine with 8 A100 GPUs, so the models all train quite fast.

| Model | Time to Train | Number of Parameters |

|---|---|---|

| DistilGPT2 | 2 minutes | 82 million |

| GPT2 | 3 minutes 15 seconds | 124 million |

| GPT2-Medium | 5 minutes 45 seconds | 355 million |

| GPT2-Large | 13 minutes 30 seconds | 774 million |

| GPT2-XL | 27 minutes | 1.5 billion |

Generation

Modern Language Models (even the GPT-2 models I'm using) generate text autoregressively. In other words, they look at the previous words, and generate one word at a time. They repeat this process over and over again, resulting in a text. But how do LLMs decide what the next word should be?

When Language Models generate the next word, they don't just come up with a single word. They actually come up with a probability for each word they could possibly say. Then, they choose one of those words. The simplest method for this is to just always choose the most likely word. However, this doesn't take advantage of all the richness in learning the model can do, and also leads to similar, repetitive outputs.

A better solution is sampling. With sampling, the model samples from the output probability distribution. More likely words occur more often, but it allows for variation. Sampling can be controlled with a parameter called temperature. (You might have encountered this while interacting with an LLM) Temperature controls the sampling, with higher temperatures increasing the randomness. We sample to generate our outputs, with a temperature of .95, which is a little more random, but not too much.

One effect of how we trained our model is that we can make the model generate specific cards by passing in part of a card and having it finish the card. For example, If I want to generate a card called Tower of the Apes, all I have to do is pass in:

<start>

<name> Tower of the Apes

The model will continue generating from there. When I pass that input into the fine-tuned MTGPT-2-xl, it spits out:

<start>

<name> Tower of the Apes

<cost> {1}{G}{G}

<type> Artifact

<text> {1}, {T}: Target creature gets +1/+1 until end of turn. Activate only once each turn.

<end>

That seems good! The output is well-formed, the rules work, and what the card does makes sense.

Evaluation

We evaluate our models using the metric from earlier. The following table shows how well each model performs on our metric as defined above. Surprisingly, all of the models do about the same.

| Model | Evaluation Score |

|---|---|

| DistilGPT2 | 57% |

| GPT2 | 55% |

| GPT2-Medium | 53% |

| GPT2-Large | 58% |

| GPT2-XL | 57% |

Bonus: Flavor Text

The most fun part of Magic cards, in my opinion, is the flavor text. This is italicized text on the card that describes something like the lore of the spell. Flavor text is sometimes funny, and sometimes pretty nerdy. It's also one of the more fun aspects of cards, in my opinion.

The keen observer will notice that the cards I generated didn't have flavor texts. There are a couple of reasons for this. When you fine tune a relatively small model like the GPT-2 models, you want to make sure that you aren't trying to give it too complex of a task. So when I was training the models to generate cards, I was trying to get them to learn how to format cards, learn numerical relationships between cost and effect, and make things you could actually use for gameplay. Trying to get the model to also write a sentence or two of lore is a very different task, so it makes sense to train a different model for that.

Also, not all Magic cards have flavor texts. Some cards have too much rules text for lore to even fit on the card. On the other hand, some Magic cards have different flavor text each time they are printed with different art. The dataset I used to train my card generator wouldn't really work for this project. I needed a different dataset for this task.

So what does the flavor text data look like? First off — I can use all of the weird double-faced and split cards that have flavor texts. It's okay if the card is complex as long as the flavor text is there. I got a different download from Scryfall, this one including every printing of every Magic card. Then, I filtered them by unique flavor texts. This dataset can have multiple versions of the same card as long as the flavor texts are different.

Also, you don't need to know every little thing about a card to write a flavor text for it. It's important to know the card's name, color, and rules text, but that's about all. I created this other dataset of 24900 cards with unique flavor texts, and trained each of my GPT-2 models on it as well.

Flavor Text Evaluation

Are the flavor texts any good? We want them both to make sense with the rules text, and sound like a Magic card. So a red card that deals damage might have a flavor text about flames burning someone. A black knight might have some dramatic, dark text. We can test this easily. All we have to do is pass in a black knight, and see what the flavor text model completes.

So I made up a black knight character

<name> The Dusk Knight

<color> B

<type> Legendary Creature — Skeleton Knight

<text> Deathtouch

Let's pass it to the flavor text generator to see what the model gives us back.

We can try a few times to get back a few options.

- One day she rose from her grave, and in her wake a terrible host of the dead rose with her.

- Death, the shroud of a thousand dreams; and all the dead are my army.

- No one knows how her ghostly form passed from the grave, but she will not rest in peace.

Those are pretty good. They're relevant and fantasy-ish, but not quite perfect. We said The Dusk Knight is a skeleton, so she probably shouldn't have a ghostly form. Maybe a skeletal form would be better. Overall, not bad though.

Or, we can see how we did with our Tower of the Apes card form earlier. Let's generate a few options again.

- The apes had no patience for humans.

- Though the apes ruled the forest, they could never contain it.

- "May the apes devour you and all your books. May the apes devour you and your thoughts." —Mogat, *Lament for Progress*

That last one was really funny to me, really capturing the old Magic card vibe.

The Full Pipeline

With all of these models trained, it's super easy to generate a new card. First, we use the card generation model to make the main text. Next, we filter that text and feed it to the flavor text generator. We tie that all together, and we have a full card.

Now I could train a model to generate images for the cards (I've done it in the past). Even better, I could use a top-tier modern image generator, and generate something very impressive. But that's like asking ChatGPT to make a card for me — not the point of this project.

Let's generate a few full cards, and see how they look. I generated a couple of cards, complete with flavor text, with each model. All I did was give the model a <start>, and let it design the whole card. Then, I passed that generated card through the flavor text generator.

But that's not all. I wanted to make the cards look more like Magic cards, and less like a wall of boring text. I wrote a python script that would call my first model to generate a card, then call the flavor text model to write it a flavor text. Then, I would pop that text into a Magic card frame. I wanted some placeholder art for the cards, so I drew some custom drawings in Microsoft Paint for the cards. The model that generated the card is in the bottom in the artist credit field. Here are several random cards, generated by all of the different models:

Sample Random Cards

Discussion

Overall, these experiments were successful. I was able to train a couple of models and they create actual Magic cards you could play with. Not only were the models able to learn the formatting of my card prompts, they also did a pretty good job of learning aspects of the game including colors, numbers, and other gameplay mechanics. The models struggle a little bit with originality, but overall that's not a huge problem. We never once had the models generate an existing card, although some names are copied.

More exciting than the ability to generate cards from scratch, the models can generate plausible cards given a name. For fun, I decided to use my professor's name to generate a few cards. (For the record, my professor is Quinn Snell.) I just made the name a little bit fantasy-ish. What's cool is that the model always knows to make this a legendary creature, the proper Magic designation for a named character.

Sample Seeded Cards

Overall, this was a really fun project, and the models turned out great. While not all of the cards are 100% game-accurate, I'm proud of them.

Magic: The Gathering, its card images, and all associated intellectual property are the property of Wizards of the Coast.